3 Making Light Work in Biology

Basic, Foundational Detection and Imaging Techniques Involving Ultraviolet, Visible, and Infrared Electromagnetic Radiation Interactions with Biological Matter

I don’t suppose you happen to know

Why the sky is blue?…

Look for yourself. You can see it’s true.

—John Ciardi (American poet 1916–1986)

General Idea: Here, we discuss the broad range of experimental biophysical techniques that detect biological components using relatively routine methods. These methods are based on basic optical photon absorption and emission processes and on light microscopy, together with similar methods that extend into the ultraviolet (UV) and infrared (IR) parts of the electromagnetic spectrum. They include methods to detect and image cells and populations of cells, as well as subcellular structures, down to the single-molecule level, for both in vitro and in vivo samples. These techniques are ubiquitous in modern biophysics labs. They are robust, have great utility in addressing biological questions, and have core physics concepts at their heart that need to be understood.

3.1 Introduction

Light microscopy, invented over 300 years ago, has revolutionized our understanding of biological processes. In its modern form, it involves much more than just the magnification of images in biological samples. There are invaluable techniques that have been developed to increase the image contrast. Fluorescence microscopy, in particular, is a very useful tool for probing biological processes. It results in high signal-to-noise ratios (SNRs) for determining the localization of biological molecules tagged with a fluorescent dye but does so in a way that is relatively noninvasive. This minimal perturbation to the native biology makes it a tool of choice in many biophysical investigations.

There has been enormous development of visible (VIS) light microscopy tools, which address biological questions at the level of single cells in particular, due in part to a bidirectional development in the operating range of sample length scales over recent years. Top-down improvements in in vivo light microscopy technologies have reduced the scale of spatial resolution down to the level of single cells, while bottom-up optimization of many emerging single-molecule light microscopy methods originally developed for in vitro contexts has been applied now to living cells.

3.2 Basic UV-VIS-IR Absorption, Emission, and Elastic Light Scattering Methods

Before we discuss single-molecule light microscopy approaches, we consider a number of basic spectroscopy techniques that are applied to bulk in vitro samples. These primarily use VIS light but also extend into the UV and IR. Some of these may appear mundane at first sight, but in fact they hold the key to many preliminary attempts at robust physical quantification in the biosciences.

3.2.1 Spectrophotometry

In essence, a spectrophotometer (or spectrometer) is a device containing a photodetector to monitor the transmittance (or conversely the reflectance) of light through a sample as a function of wavelength. Instruments typically cover a wavelength range from the long UV (~200–400 nm), through the VIS (~400–700 nm), and across the near IR into the mid and far IR (~700–20,000 nm). The light is generated from one or more broadband sources in combination with wavelength filters and/or monochromators. A monochromator uses either optical dispersion or diffraction, in combination with mechanical rotation, to select different wavelengths of incident light. The light is then directed through a solvated sample that is either held in a sample cuvette or sandwiched between transparent mounting plates. Cuvettes and plates are generally made from glass or plastic for VIS, sodium chloride for IR, or quartz for UV, to minimize their own absorption at these respective wavelengths. The incident wavelength can be scanned over a range to generate the sample's characteristic absorption spectrum.

Scanning IR spectrophotometers scan exclusively over IR wavelengths. A common IR instrument is the Fourier transform infrared (FTIR) spectrometer. Instead of selecting one probe wavelength at a time, as a scanning spectrophotometer does, it uses many wavelengths at once to generate a polychromatic interference pattern (an interferogram) from the sample. This gives some advantage in terms of SNR and spectral resolution.
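The sketch below makes the Fourier transform step concrete under idealized, noise-free assumptions. It synthesizes an interferogram from two hypothetical spectral lines at 1000 and 1700 cm−1 and recovers their positions with a direct cosine transform. The function names, sampling step, and line positions are illustrative. Real FTIR processing also involves apodization, phase correction, and ratioing against a background spectrum, none of which is modeled here.

```python
import math


def interferogram(lines, n_points, step_cm):
    """Idealized one-sided interferogram I(delta) = sum_j B_j cos(2*pi*nu_j*delta).

    lines: list of (wavenumber in cm^-1, spectral amplitude B_j)
    step_cm: optical path difference increment between samples, in cm
    """
    return [
        sum(b * math.cos(2 * math.pi * nu * n * step_cm) for nu, b in lines)
        for n in range(n_points)
    ]


def cosine_spectrum(signal, step_cm):
    """Cosine transform of the interferogram onto wavenumber bins.

    Bin spacing (the spectral resolution) is 1/(N*step) cm^-1, and the
    highest recoverable wavenumber (Nyquist limit) is 1/(2*step) cm^-1.
    Returns a list of (wavenumber, amplitude) pairs.
    """
    n = len(signal)
    d_nu = 1.0 / (n * step_cm)
    spectrum = []
    for k in range(n // 2 + 1):
        nu = k * d_nu
        s = sum(x * math.cos(2 * math.pi * nu * i * step_cm) for i, x in enumerate(signal))
        spectrum.append((nu, abs(s) * step_cm))
    return spectrum


if __name__ == "__main__":
    step = 1.25e-4  # cm, giving a Nyquist limit of 4000 cm^-1
    signal = interferogram([(1000.0, 1.0), (1700.0, 0.5)], 800, step)
    peaks = sorted(cosine_spectrum(signal, step), key=lambda p: p[1], reverse=True)[:2]
    for nu, amp in sorted(peaks):
        print(f"peak at {nu:.0f} cm^-1, amplitude {amp:.4f}")
```

With 800 samples the resolution is 10 cm−1. Recording a longer path-difference range (more samples) narrows the bins, which is the origin of FTIR's resolution advantage.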

The resulting interferogram can then be Fourier transformed to yield the IR absorption spectrum. Such spectra are especially useful for identifying different organic chemical motifs in samples. This is because the vibrational stretch energies of the covalent bonds found in biomolecules correspond to IR wavelengths, so these bonds show up as measurable absorption peaks in the spectrum. The equivalent angular frequency for IR absorption, ω, can be used to estimate the mean stiffness of a covalent bond (a useful parameter in molecular dynamics simulations, see Chapter 8). To do this, the bond is modeled as a simple harmonic oscillator of two masses m1 and m2 (representing the masses of the atoms at either end of the bond) joined by a spring of stiffness kr:

$$\omega = \sqrt{\frac{k_r}{\mu}} \tag{3.1}$$

where μ is the reduced mass given by

$$\mu = \frac{m_1 m_2}{m_1 + m_2} \tag{3.2}$$

IR spectroscopists generally do not cite absorption wavelengths but refer instead to wavenumbers in units of cm−1. The range ~700–4000 cm−1 is relevant to most of the covalent bonds found in biomolecules and corresponds to a wavelength range of ~2.5–15 μm. Although broadband IR sources are still sometimes used in older machines, it is now more common to use IR laser sources.
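Equations 3.1 and 3.2 can be inverted to estimate a bond stiffness from a measured absorption wavenumber, using ω = 2πcν̃. The sketch below does this for a C=O stretch at 1715 cm−1, an illustrative value inside the C=O range of Table 3.1. It treats the carbon and oxygen atoms as isolated masses of 12 and 16 atomic mass units. This two-body approximation ignores coupling to the rest of the molecule.

```python
import math

AMU_KG = 1.66053906660e-27  # atomic mass unit, kg
C_CM_PER_S = 2.99792458e10  # speed of light, cm/s


def reduced_mass_kg(m1_amu, m2_amu):
    """Reduced mass mu = m1*m2/(m1 + m2) (Equation 3.2), converted to kg."""
    return m1_amu * m2_amu / (m1_amu + m2_amu) * AMU_KG


def bond_stiffness(wavenumber_per_cm, m1_amu, m2_amu):
    """Invert omega = sqrt(k_r/mu) (Equation 3.1) to give k_r in N/m.

    The angular frequency follows from the wavenumber via omega = 2*pi*c*nu_tilde.
    """
    omega = 2 * math.pi * C_CM_PER_S * wavenumber_per_cm
    return reduced_mass_kg(m1_amu, m2_amu) * omega ** 2


def wavenumber_to_wavelength_um(wavenumber_per_cm):
    """Wavelength in micrometres: lambda = 1/nu_tilde, with 1 cm = 1e4 um."""
    return 1e4 / wavenumber_per_cm


if __name__ == "__main__":
    k_co = bond_stiffness(1715.0, 12.0, 16.0)
    print(f"C=O stiffness ~ {k_co:.0f} N/m")
    print(f"700-4000 cm^-1 spans {wavenumber_to_wavelength_um(4000):.1f}-"
          f"{wavenumber_to_wavelength_um(700):.1f} um")
```

The result is roughly 1.2 × 10³ N/m. The second line reproduces the ~2.5–15 μm wavelength range quoted above.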

The pattern of IR absorption peaks in the spectrum, in terms of their relative positions in wavelength and their amplitudes, can generate a unique signature for a given biochemical component. It can therefore be invaluable for sample characterization and purity analysis. The principal drawback of IR spectroscopy is that water exhibits intense IR absorption, so samples generally need to be in a dehydrated state. IR absorption ultimately excites a transition between different bond vibrational states in a molecule (Figure 3.1a). This requires a change in electric dipole moment, arising either from electrical polar asymmetry of the atoms that form the bond or from the presence of delocalized electronic molecular orbitals (Table 3.1).

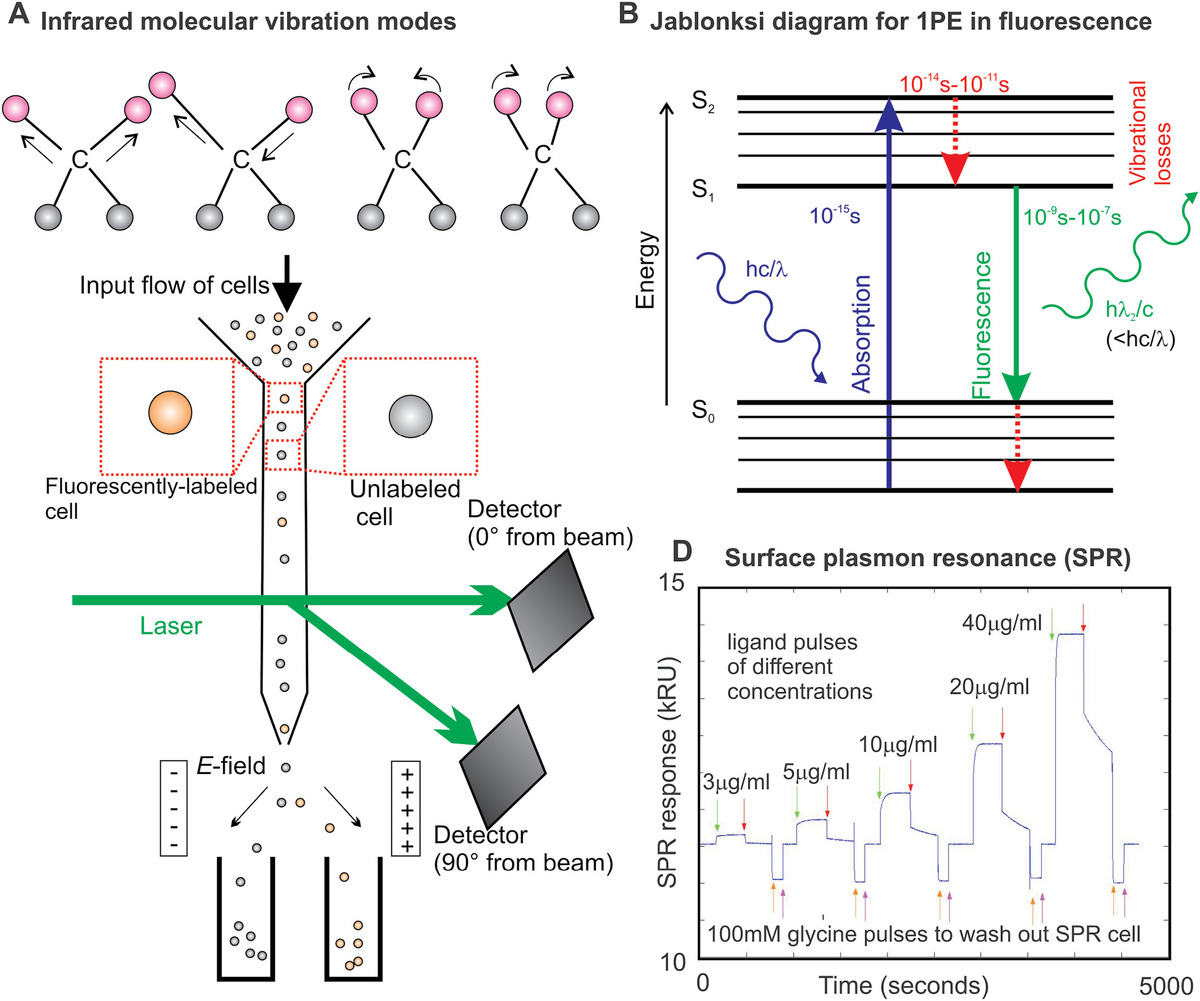

Figure 3.1 Simple spectroscopy and fluorescence excitation. (a) Some typical vibrational modes of a carbon atom–centered molecular motif that are excited by infrared absorption. (b) Jablonski energy level transition diagram for single-photon excitation resulting in fluorescence photon emission, characteristic time scales indicated. (c) Schematic of a typical fluorescence-assisted cell sorting device. (d) Typical output response from an SPR device showing injection events of a particular ligand, followed by washes and subsequent ligand injections into the SPR chamber of increasing concentration.

Table 3.1 Peak IR absorption ranges of covalent bonds found in biomolecules

| Peak IR Absorption Range (cm−1) | Bond in Biomolecule |
|---|---|
| 730–770 | C—H |
| 1180–1200 | C—O—C |
| 1250–1340 | C—N |
| 1500–1600 | C=C |
| 1700–1750 | C=O |
| 2500–2700 (and other peaks) | O—H |
| 3300–3500 (and other peaks) | N—H |

Conventional FTIR is limited by a combination of factors including an inevitable trade-off between acquisition time and SNR and the fact that there is no spatial localization information. However, recent developments have utilized multiple intense, collimated IR beams from synchrotron radiation (see Chapter 5). This approach permits spatially extended detection of IR absorption signals across a sample allowing diffraction-limited time-resolved high-resolution chemical imaging, which has been applied to tissue and cellular samples (Nasse et al., 2011).

Many biomolecules that contain chemical bonds that absorb in the IR will also have a strong Raman signal. The Raman effect is the inelastic scattering of an excitation photon by a molecule, resulting in either a small increase or a small decrease in the wavelength of the scattered light. There is a rough mutual exclusion principle: strong absorption bands in the IR correspond to relatively weak bands in a Raman spectrum, and vice versa. Raman spectroscopy is a powerful biophysical tool for generating molecular signatures and is discussed fully in Chapter 4.

Long UV light (~200–400 nm) is also a useful spectrophotometric probe, especially for determining the protein and nucleic acid content of a sample. Proteins absorb strongly at ~280 nm, mainly because of the aromatic side chains of tryptophan and tyrosine; peptide bonds themselves absorb most strongly at shorter wavelengths, around 190–220 nm. Nucleic acids such as RNA and DNA have a peak absorption wavelength nearer ~260 nm. It is therefore common to use the ratio of absorbance at these two wavelengths as a metric for protein and/or nucleic acid content. For example, biochemists often deem a 260/280 absorbance ratio of ~1.8 “pure” for DNA and a ratio of ~2.0 “pure” for RNA. A significantly lower ratio often indicates the presence of protein, or potentially of other contaminants such as phenol that absorb strongly at or near 280 nm. With suitable calibration, the 260 and 280 nm absorbance values can also be used to determine the concentrations of nucleic acids and proteins in the absence of sample contaminants.
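The ratio test and concentration estimate described above can be sketched as follows. The conversion factors used here are widely used laboratory conventions rather than values given in this text: 50 µg mL−1 for double-stranded DNA and 40 µg mL−1 for RNA, per A260 unit over a 1 cm path. The tolerance of 0.1 used to flag a low ratio is a hypothetical choice.

```python
# Commonly used A260-to-concentration factors (ug/mL per absorbance unit, 1 cm path).
A260_FACTOR_UG_PER_ML = {"dsDNA": 50.0, "RNA": 40.0}
# Approximate 260/280 ratios conventionally regarded as "pure".
PURE_260_280 = {"dsDNA": 1.8, "RNA": 2.0}


def nucleic_acid_check(a260, a280, kind="dsDNA", path_cm=1.0, tolerance=0.1):
    """Return (260/280 ratio, concentration in ug/mL, verdict string).

    tolerance is a hypothetical margin below the 'pure' ratio at which the
    sample is flagged as possibly contaminated (e.g., by protein or phenol).
    """
    ratio = a260 / a280
    conc_ug_per_ml = (a260 / path_cm) * A260_FACTOR_UG_PER_ML[kind]
    if ratio < PURE_260_280[kind] - tolerance:
        verdict = "low ratio: possible protein or phenol contamination"
    else:
        verdict = "ratio consistent with a pure sample"
    return ratio, conc_ug_per_ml, verdict


if __name__ == "__main__":
    print(nucleic_acid_check(0.50, 0.27))  # clean-looking DNA sample
    print(nucleic_acid_check(0.50, 0.40))  # ratio ~1.25, flagged
```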

In basic spectrophotometers, the light transmitted through the sample is measured by a photodetector, typically a photodiode, and the resulting signal is amplified. More expensive machines include a second reference beam passing through an identical reference cuvette. This cuvette contains the same solvent (generally water, with some chemicals to stabilize the pH) but no sample, and provides a baseline against which to reference the sample readings. The method is used to measure the density of samples ranging from relatively large biological particulates to much smaller ones. Examples range from cells in suspension, used to determine the so-called growth stage of a culture, down to molecules in solution.

To characterize attenuation, suppose that the rate of absorption of light along the direction of propagation, say z, in an incrementally thin slice of the sample is proportional to the total amount of material in that slice multiplied by the incident light intensity I(z). In other words, dI/dz = −σ(λ)CI(z). It is then straightforward to show, for a homogeneous tissue, that integration gives

$$I(z) = I(0)\exp\left[-\sigma(\lambda)\,C\,z\right] \tag{3.3}$$

This is called the “Beer–Lambert law.” It is a very simple model that follows from the assumption that the drop in light intensity on propagating through a narrow slice of sample is proportional to the incident intensity and the slice’s width. It is obeyed empirically up to relatively high concentrations, beyond which electrostatic interactions between scatterers become significant. Here, σ(λ) is the mean absorption cross-sectional area of the tissue, which depends on the wavelength λ, and C is the concentration (number per unit volume) of the absorbing molecules. The absorbance A is often a useful quantity, defined as

$$A = -\log_{10}\frac{I(z)}{I(0)} = \frac{\sigma(\lambda)\,C\,z}{\ln 10} \tag{3.4}$$

For a real tissue over a wide range of z, there may be heterogeneity in the types of molecules, their absorption cross-sectional areas, and their concentrations, so that σ and C vary with z. The Beer–Lambert law can also be used to measure the concentration of a population of cells. This is often cited as the optical density (OD) measurement, such that

$$\mathrm{OD} = \frac{A}{L} \tag{3.5}$$

where L is the total path length (in cm) over which the absorbance measurement was made. Many basic spectrophotometers use a cuvette standardized at L = 1 cm, and so it is normal to standardize OD measurements on the assumption of a 1 cm path length.
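Equations 3.3 to 3.5 translate directly into a few helper functions. This sketch assumes decadic absorbance, with cross-sections in cm², number densities in cm−3, and path lengths in cm. The function names are illustrative.

```python
import math


def transmitted_fraction(sigma_cm2, number_density_per_cm3, z_cm):
    """Beer-Lambert attenuation I(z)/I(0) = exp(-sigma*C*z)."""
    return math.exp(-sigma_cm2 * number_density_per_cm3 * z_cm)


def absorbance(i_transmitted, i_incident):
    """Decadic absorbance A = -log10(I/I0)."""
    return -math.log10(i_transmitted / i_incident)


def optical_density(a, path_cm=1.0):
    """Absorbance normalized to a 1 cm path length."""
    return a / path_cm


if __name__ == "__main__":
    # 10% transmission through a 0.5 cm cuvette: A = 1, so OD = 2 per cm.
    a = absorbance(10.0, 100.0)
    print(a, optical_density(a, path_cm=0.5))
    # sigma*C*z = 1 gives A = 1/ln(10) ~ 0.434, linking the two forms of the law.
    print(absorbance(transmitted_fraction(1e-16, 1e16, 1.0), 1.0))
```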

Note that the “absorbance” measured by a spectrophotometer is not due exclusively to photon absorption as such. Photon absorption events may contribute to the reduction in transmitted light intensity, but much of the reduction is due to scattering. In simple terms, general light scattering involves an incident photon inducing an oscillating dipole in the electron molecular orbital cloud, which then reradiates light in other directions. The measured reduction in light intensity on passing through a biological sample in a standard VIS light spectrophotometer is primarily due to elastic scattering of the incident light. Because single cells are comparable to or larger than the wavelength of the incident light, this scattering is primarily Mie scattering. More specifically, it is often referred to as “Tyndall scattering”: Mie scattering in a colloidal environment in which the scattering particles are not necessarily spherical. Suspensions of rod-shaped bacterial cells are a good example. Differences in scatterer shape result in apparent differences in OD. Care is therefore needed to compare like with like in terms of scatterer shape when comparing OD measurements; if this is not possible, a shape correction factor should be applied. Note that some absorbance spectrometers can correct for scattering effects.

These absorbance measurements are particularly useful for estimating the density of growing microbial cultures. For example, for many bacteria an OD of 1.0 measured at the conventional wavelength of 600 nm corresponds to ~10⁸ cells mL−1, equivalent to a typical cloudy-looking culture grown to “saturation.” Spectrophotometry can be extended into colorimetry, in which an indicator dye present in the sample changes color upon binding a given chemical. The color change can report whether a given chemical reaction has occurred, and monitoring it over time reveals details of the kinetics of that reaction.
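Here is a minimal sketch of converting OD600 readings into cell densities. It uses the ~10⁸ cells mL−1 per OD unit rule of thumb quoted above as a default calibration; in practice this factor depends on strain and instrument and should be calibrated. The dilution factor assumes that dense cultures are diluted before measurement to keep the reading in the instrument's linear range. The doubling-time helper assumes exponential growth between the two readings. Both helpers are illustrative additions.

```python
import math

CELLS_PER_ML_PER_OD600 = 1e8  # rule-of-thumb calibration; strain-dependent


def cells_per_ml(od600, dilution_factor=1.0, calibration=CELLS_PER_ML_PER_OD600):
    """Estimate cell density from an OD600 reading of a (possibly diluted) sample."""
    return od600 * dilution_factor * calibration


def doubling_time(od_start, od_end, elapsed):
    """Doubling time, assuming exponential growth: elapsed * ln2 / ln(OD_end/OD_start)."""
    return elapsed * math.log(2) / math.log(od_end / od_start)


if __name__ == "__main__":
    print(f"{cells_per_ml(0.25, dilution_factor=4.0):.1e} cells/mL")
    print(f"doubling time ~ {doubling_time(0.1, 0.4, 60.0):.0f} min")
```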

3.2.2 Fluorimetry

A modified spectrophotometer called a fluorimeter (or fluorometer) can excite a sample with incident light over a narrow band of wavelengths and capture fluorescence emissions. For bulk ensemble average in vitro fluorimetry investigations, several independent physical parameters are often consolidated for simplicity into just a few parameters to characterize the sample. For example, the absorption cross-section for a fluorescent sample is related to its extinction coefficient ε (often cited in non-SI units of M−1 cm−1) and the molar concentration of the fluorophore cm by

$$\sigma(\lambda)\,C = \ln(10)\,\varepsilon(\lambda)\,c_m \tag{3.6}$$

Therefore, the Beer–Lambert law for a fluorescent sample can be rewritten as

$$I(z) = I(0)\,10^{-\varepsilon(\lambda)\,c_m z} \tag{3.7}$$

The key physical process in bulk fluorimetry is single-photon excitation. In this process, the energy of one photon of light is absorbed and ultimately re-emitted as a photon of lower energy. The process is easiest to understand when depicted as a Jablonski diagram of the energy level transitions involved (Figure 3.1b). First, absorption of the photon by the electrons of a fluorophore (a fluorescent dye) causes an electronic transition to a higher energy state, a process that typically takes ~10⁻¹⁵ s. Vibrational relaxation and internal conversion then typically occur over 10⁻¹² to 10⁻¹⁰ s, dissipating part of the absorbed energy nonradiatively. In essence, exciting an electron to a higher energy state redistributes charge in the molecular orbitals, which drives electrostatically coupled oscillatory motion of the positively charged nuclei. Fluorescence emission can then occur following an electronic transition back to the ground state over ca. 10⁻⁹ to 10⁻⁶ s. Because of the vibrational losses, the emitted photon has lower energy (and hence longer wavelength) than the excitation light.
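The energy bookkeeping in the Jablonski picture can be checked with E = hc/λ. In this sketch, the 488 nm excitation and 520 nm emission wavelengths are illustrative values for a generic green-emitting dye, not data from the text. The difference between the two photon energies is the energy lost per absorbed photon, mostly through vibrational relaxation.

```python
HC_EV_NM = 1239.84198  # Planck constant x speed of light, in eV nm


def photon_energy_ev(wavelength_nm):
    """Photon energy E = hc/lambda, in electronvolts."""
    return HC_EV_NM / wavelength_nm


def stokes_energy_loss_ev(excitation_nm, emission_nm):
    """Energy difference between absorbed and emitted photons
    (positive when emission is red-shifted relative to excitation)."""
    return photon_energy_ev(excitation_nm) - photon_energy_ev(emission_nm)


if __name__ == "__main__":
    print(f"{photon_energy_ev(488):.3f} eV absorbed, "
          f"{photon_energy_ev(520):.3f} eV emitted, "
          f"{stokes_energy_loss_ev(488, 520):.3f} eV lost")
```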

In principle, an alternative electronic transition involves the first excited triplet state. This state is reached from the excited singlet state via intersystem crossing, a quantum mechanically spin-forbidden transition from a net spin-zero to a spin-one state. It occurs over longer time scales than fluorescence, ca. 10⁻³–100 s, and results in emission of a lower-energy phosphorescence photon. The process can cause discrete photon bunching over these time scales. This bunching is generally not observed for typical data acquisition time scales longer than a millisecond, because it is averaged out. However, the effect is relevant to fluorescence techniques using advanced microscopy in which detection is performed over much faster time scales, such as fluorescence lifetime imaging microscopy (FLIM), discussed later in this chapter.

The electronic transitions from ground state to excited state, and from excited state back to ground state, are vertical transitions on the Jablonski diagram. This follows from the quantum mechanical Franck–Condon principle: electronic transitions are so fast compared with nuclear motion that the atomic nuclei are effectively stationary during them. In addition, the vibrational energy levels of the excited state typically resemble those of the ground state. As a result, the emission spectrum of a fluorophore is often an approximate mirror image of its excitation spectrum.

For in vitro fluorimetry, a cuvette of sample is often excited into fluorescence using a broadband light source such as a mercury or xenon arc lamp. The fluorescence emission is measured through a suitable wavelength bandwidth filter at 90° to the light source to minimize detection of the incident excitation light. Fluorescence may be emitted from a fluorescent dye attached to a biological molecule in the sample, which therefore acts as a “reporter.” It may also come from naturally fluorescent components of biological material. These native signals are relatively small but can be measurable in in vitro experiments, which often use purified components at greater concentrations than occur in their native cellular environment.

For example, tryptophan fluorescence involves measuring the native fluorescence of the aromatic amino acid tryptophan (see Chapter 2). Tryptophan is very hydrophobic and thus is often buried at the center of folded proteins, away from surrounding water molecules. On exposure to water, its fluorescence properties change, which can be used as a metric for whether the protein is in its folded conformational state. Chlorophyll, a molecule essential to photosynthesis in plants and in many bacteria (see Chapter 2), also has significant fluorescent properties.

Note also that in vitro fluorimetry is sometimes affected by a problem known as the “inner filter effect.” The primary inner filter effect (PIFE) occurs when fluorophores toward the front of the cuvette, nearest the excitation beam entry point, absorb some of the beam. This reduces the intensity experienced by fluorophores toward the back of the cuvette and can make the measured fluorescence appear to depend nonlinearly on sample concentration. There is also a secondary inner filter effect (SIFE), in which the measured fluorescence intensity decreases because fluorophores reabsorb light in the emission region. PIFE is generally a more serious problem than SIFE, because fluorophores absorb more strongly at the shorter excitation wavelengths than at the longer emission wavelengths. Correcting these effects properly requires a controlled titration at different fluorophore concentrations to fully characterize the fluorescence response. Alternatively, an approximate mathematical model can be used to characterize the effect. In practice, many researchers simply work at concentrations low enough that the effect can be ignored.
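One widely used approximate correction for inner filter effects applies to right-angle detection from the centre of a standard cuvette. It multiplies the observed intensity by 10^((A_ex + A_em)/2), where A_ex and A_em are the sample absorbances at the excitation and emission wavelengths. This is a standard textbook approximation swapped in here rather than a model given in this chapter, and it becomes unreliable at high absorbance. The extinction coefficient, concentration, and emission absorbance in the usage lines are hypothetical.

```python
def absorbance_from_epsilon(epsilon_per_molar_per_cm, conc_molar, path_cm=1.0):
    """Decadic Beer-Lambert absorbance A = epsilon * c_m * L."""
    return epsilon_per_molar_per_cm * conc_molar * path_cm


def inner_filter_corrected(f_observed, a_excitation, a_emission):
    """Approximate inner-filter correction: F_corr = F_obs * 10**((A_ex + A_em) / 2)."""
    return f_observed * 10 ** ((a_excitation + a_emission) / 2)


if __name__ == "__main__":
    # Hypothetical dye: epsilon = 50,000 M^-1 cm^-1 at 2 uM in a 1 cm cuvette.
    a_ex = absorbance_from_epsilon(5.0e4, 2.0e-6)
    a_em = 0.01  # hypothetical absorbance at the emission wavelength
    print(f"A_ex = {a_ex:.3f}; corrected/observed = "
          f"{inner_filter_corrected(1.0, a_ex, a_em):.3f}")
```

Even at A_ex = 0.1 the correction is about 13%. This illustrates why working at low absorbance is the usual practical choice.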

3.2.3 Flow Cytometry and Fluorescence-Assisted Cell Sorting

The detection of scattered light and fluorescence emissions from cell cultures underpins the powerful high-throughput techniques of flow cytometry and fluorescence-assisted cell sorting (FACS). In flow cytometry, a culture of cells is flowed past a detector using controlled microfluidics. The diameter of the flow cell close to the detector is ~10⁻⁵ m, which ensures that only single cells flow past the detector at any one time. In principle, a detector can be designed to measure a variety of physical parameters of the cells as they flow past, for example, electrical impedance and optical absorption. However, by far the most common detection method uses focused laser excitation of cells near a sensitive photodetector, which measures the fluorescence emissions of individual cells as they flow past.

Modern commercial instruments have several laser sources of different wavelengths and associated fluorescence detectors. Typically, the cells under investigation are labeled with a specific fluorescent dye. The fluorescence readout from flow cytometry can therefore be used as a metric for the purity of subsequent cell populations, that is, the proportion of cells in a subsequent culture that carry the original label. A common adaptation of flow cytometry, FACS, adds the capability to sort cells according to whether or not they are fluorescently labeled. A typical FACS design detects the fluorescence signature with a photodetector positioned at 90° relative to the incident laser beam. A second photodetector, in line with the beam, measures the light transmitted or scattered in the forward direction. This signal is a metric for the size of the particle flowing past the detector. It is often used to determine whether just a single cell is passing, as opposed to, more rarely, two or more in line with the incident laser beam (Figure 3.1c).

Cells are usually sorted into two populations: those with a fluorescence intensity above a certain threshold and those without. Sorting typically relies on rapid electrical feedback of the fluorescence signal to electrostatic plates. Piezoelectric transducers first break the flow stream into nanodroplets, and droplets containing a selected cell are electrically charged. The electrostatic plates then deflect these charged droplets, shunting cells into one of two output reservoirs. Other commercial FACS devices use direct mechanical sorting of the flow, and some bespoke devices have implemented methods based on optical tweezers (OTs) (Chapter 6).
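The threshold logic of a two-way sort can be sketched as follows. All of the following are hypothetical choices for illustration: the `Event` fields, the size window used to reject debris and likely multiple-cell events, and the fluorescence threshold. Real instruments implement their own gating schemes.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """One particle passing the laser (arbitrary detector units)."""
    size_signal: float   # in-line detector signal, used as a proxy for particle size
    fluorescence: float  # signal from the 90-degree fluorescence detector


def sort_events(events, size_min, size_max, threshold):
    """Split events into (positive, negative, rejected) lists.

    Events outside [size_min, size_max] are treated as debris or multiple
    cells and are not sorted; the rest are split on whether their
    fluorescence lies above the threshold.
    """
    positive, negative, rejected = [], [], []
    for ev in events:
        if not size_min <= ev.size_signal <= size_max:
            rejected.append(ev)
        elif ev.fluorescence > threshold:
            positive.append(ev)
        else:
            negative.append(ev)
    return positive, negative, rejected


if __name__ == "__main__":
    events = [Event(1.0, 50.0), Event(1.1, 5.0), Event(2.4, 80.0), Event(0.1, 90.0)]
    pos, neg, rej = sort_events(events, size_min=0.5, size_max=1.5, threshold=20.0)
    print(len(pos), len(neg), len(rej))
```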

FACS sorts cells very rapidly and is especially useful for purifying a subpopulation from a heterogeneous cell population. For example, cells may have been genetically modified to investigate some aspect of their biology, but the modification may not have been transferred efficiently to 100% of the cells in a culture. If a suitable fluorescent marker is placed only on the genetically modified cells, FACS can sort these efficiently to generate a pure output culture containing only these cells.

3.2.4 Polarization Spectroscopy

Many biological materials are birefringent, or optically active, often owing to the presence of repeating molecular structures of a given shape. In an in vitro sample, this can show up as an ability to rotate the plane of polarization of incident light. In the linear dichroism (LD) and circular dichroism (CD) techniques, spectrophotometry is performed with polarized incident light, and the sample's differential absorption of different polarization states is measured. LD uses linearly polarized light as the input beam, comparing absorption of light polarized parallel and perpendicular to a reference axis. CD uses circularly polarized light, which in general emerges elliptically polarized after propagating through an optically active sample. The ellipticity changes are indicative of specific structural motifs in the sample. They do not reveal fine structural detail at, for example, the atomistic level. However, they can indicate the relative proportions of generic secondary structure, such as β-sheet, α-helix, or random coil conformations (see Chapter 2), in a protein sample.

CD differs importantly from LD in that the biomolecules being probed are usually free to diffuse in solution and so are randomly oriented, whereas those in LD have a fixed or preferred molecular orientation. A measured CD spectrum therefore depends on the intrinsic asymmetric (i.e., chiral) properties of the biomolecules in solution. This makes CD useful for determining the secondary structure of relatively large biomolecules in particular, such as protein and nucleic acid biopolymers. LD spectroscopy instead requires the probed biomolecules to have a fixed or preferred orientation; if their orientations are random, the net LD signal is zero.
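The claim that randomly oriented molecules give zero net LD can be checked numerically. This sketch makes several assumptions. Light propagates along y, and the orientation (for example, flow) axis is z. Absorbance is taken as proportional to the squared projection of a transition dipole onto the polarization direction. The normalized difference (A∥ − A⊥)/(A∥ + A⊥) is an illustrative normalization, not a definition given in the text.

```python
import math
import random


def random_unit_vector(rng):
    """Isotropically distributed unit vector (normalized 3D Gaussian sample)."""
    while True:
        x, y, z = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        norm = math.sqrt(x * x + y * y + z * z)
        if norm > 1e-12:
            return x / norm, y / norm, z / norm


def normalized_ld(dipoles):
    """(A_par - A_perp)/(A_par + A_perp) for light travelling along y.

    A_par: polarization along the orientation axis z -> mean of d_z^2
    A_perp: polarization along x                      -> mean of d_x^2
    """
    a_par = sum(d[2] ** 2 for d in dipoles) / len(dipoles)
    a_perp = sum(d[0] ** 2 for d in dipoles) / len(dipoles)
    return (a_par - a_perp) / (a_par + a_perp)


if __name__ == "__main__":
    rng = random.Random(1)
    isotropic = [random_unit_vector(rng) for _ in range(50_000)]
    aligned = [(0.0, 0.0, 1.0)] * 1000
    print(f"random orientations: LD ~ {normalized_ld(isotropic):+.3f}")
    print(f"aligned along z:     LD = {normalized_ld(aligned):+.3f}")
```

For random orientations, both mean squared projections tend to 1/3, so the signal averages to zero. Perfect alignment gives the maximum value of 1.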

To achieve a preferred molecular orientation, flow can be used to comb out large molecules (see Chapter 6). Other methods include magnetic field alignment, conjugation to surfaces, and capturing molecules in gels, which can be extruded to generate preferential molecular orientations. LD is particularly useful for generating information on molecular alignment on surfaces, since this is where many biochemical reactions occur in cells, as opposed to free in solution. It can also be used to generate time-resolved information on biochemical reactions at such surfaces.

LD and CD are complementary biophysical techniques; it is not simply that linearly polarized light is an extreme example of circularly polarized light. Rather, the combination of both techniques can reveal valuable details of both molecular structure and kinetics. For example, CD can generate information concerning the secondary structure of a folded protein that is integrated in a cell membrane, whereas LD might generate insight into how that protein inserts into the membrane in the first place.

Fluorescence excitation also has a dependence on the relative orientation between the E-field polarization vector and the transition dipole moment of the fluorescent dye molecule, embodied in the photoselection rule (see Corry, 2006). The intensity I of fluorescence emission from a fluorophore whose transition dipole moment is oriented at an angle θ relative to the incident E-field polarization vector is as follows:

$$I(\theta) = I(0)\cos^2\theta \tag{3.8}$$

In general, fluorophores have some freedom to rotate, and many dyes in cellular samples in effect exhibit isotropic emission. This means that over the time scale of a single sampling window, a dye molecule will have rotated its orientation randomly many times. As a result, there appears to be no preferential orientation of emission in any given sampling window. However, as the sampling time scale is reduced, the likelihood of observing anisotropy, r, that is, preferential orientations for absorption and emission, increases. The threshold time scale for this is set by the rotational correlation time τR of the fluorophore, attached to a specific biomolecule, in its local cellular environment. The anisotropy can be calculated from the measured fluorescence intensity, either from a population of fluorophores, as in in vitro bulk fluorescence polarization measurements, or from a single fluorophore. The calculation uses the measured emission intensities parallel (I1) and perpendicular (I2) to the incident linear E-field polarization vector after a time t:

$$r(t) = \frac{I_1(t) - I_2(t)}{I_1(t) + 2I_2(t)} \tag{3.9}$$

In fluorescence anisotropy measurements, the detection system often responds differently to the two polarizations of the emitted light. To correct for this, a G-factor is normally used, defined as the ratio of the vertical polarization detector sensitivity to the horizontal polarization detector sensitivity. Thus, in Equation 3.9, the parameter I2(t) is replaced by GI2(t).
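Equation 3.9 with the G-factor correction reduces to a one-line function. This sketch assumes that I1 and I2 are background-subtracted intensities and that G has been measured separately for the instrument. The numbers in the usage lines, including the G-factor of 1.25, are illustrative.

```python
def anisotropy(i_parallel, i_perpendicular, g_factor=1.0):
    """Fluorescence anisotropy r = (I1 - G*I2) / (I1 + 2*G*I2).

    i_parallel (I1) and i_perpendicular (I2) are emission intensities measured
    parallel and perpendicular to the excitation polarization; g_factor
    corrects for the detection system's polarization-dependent sensitivity.
    """
    corrected_perp = g_factor * i_perpendicular
    return (i_parallel - corrected_perp) / (i_parallel + 2 * corrected_perp)


if __name__ == "__main__":
    print(anisotropy(1000.0, 400.0))                 # uncorrected
    print(anisotropy(1000.0, 400.0, g_factor=1.25))  # with a hypothetical G-factor
```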

Note that another measure of anisotropy is sometimes still cited in the literature as a parameter confusingly called the polarization, P, such that P = 3r/(2 + r) = (I1 − I2)/(I1 + I2), to be compared with Equation 3.9. The anisotropy decays with time as the fluorophore orientation rotates, such that for freely rotating fluorophores

$$r(t) = r_0\exp\left(-\frac{t}{\tau_R}\right) \tag{3.10}$$

where r0 is called the “initial anisotropy” (also known as the “fundamental anisotropy”). It is related to the relative angle θ between the absorption and emission transition dipole moments of the fluorophore by

$$r_0 = \frac{3\cos^2\theta - 1}{5} \tag{3.11}$$

This indicates a range for r0 of −0.2 (perpendicular dipoles) to +0.4 (parallel dipoles). The rotational correlation time is inversely proportional to the rotational diffusion coefficient DR such that

$$\tau_R = \frac{1}{6D_R} \tag{3.12}$$

The rotational diffusion coefficient is given by the Stokes–Einstein relation (see Chapter 2), $D_R = k_BT/\gamma_R$, with the translational drag coefficient replaced by the equivalent rotational drag coefficient γR. Similarly, the mean squared angular displacement ⟨θ²⟩ observed after a time t relates to DR in an analogous way as for lateral diffusion:

$$\langle\theta^2\rangle = 2D_R t \tag{3.13}$$

For a perfect sphere of radius r rotating in a medium of viscosity η at absolute temperature T, the rotational correlation time can be calculated exactly as

$$\tau_R = \frac{4\pi\eta r^3}{3k_BT} \tag{3.14}$$

where kB is the Boltzmann constant. Molecules that integrate into phospholipid bilayers, such as integral membrane proteins and membrane-targeting organic dyes, often orient stably parallel to the hydrophobic tail groups of the phospholipids, so that their rotation is confined to that axis. The frictional drag can then be approximated as that of a cylinder rotating about its long axis, using the Saffman–Delbrück equations (see Saffman, 1975; Hughes, 1981). Here, the frictional drag γ of a rotating cylinder is approximated as

(3.15)

We assume that the viscosities of the watery environment just outside the cell membrane and just inside the cell cytoplasm (μ1 and μ2, respectively) are approximately the same, ηc (typically ~0.001–0.003 Pa·s). The dimensionless parameter ε is given by

(3.16)

The viscosity of the phospholipid bilayer is given by the parameter ηm, which is ~100–1000 times greater than ηc depending on both the specific phospholipids present and the local molecular architecture of nonlipids in the membrane. The parameter C can be approximated as

(3.17)

Here, c is the Euler–Mascheroni constant (approximately 0.5772). The effective rotational diffusion coefficient can then be calculated in the usual way using the Stokes–Einstein relation, and the rotational correlation time follows from Equation 3.12.

Typical nanometer length scale fluorophores in the watery cytoplasm of cells have rotational correlation times of a few nanoseconds (ns), compared to a few microseconds (μs) in a typical phospholipid bilayer. These parameters can be measured directly using time-resolved anisotropy, with a suitable fluorescence polarization spectrometer that can typically perform sub-nanosecond sampling. The application of fluorescence anisotropy to cellular samples, typically in a culture medium containing many thousands of cells, offers a powerful method to probe the dynamics of protein complexes that, importantly, can be related back to the actual structure of the complexes (see Piston, 2010), which has an advantage over standard fluorescence microscopy methods.
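Equations 3.10, 3.11, 3.12, and 3.14 can be combined into a short calculation. This sketch treats the fluorophore, together with whatever it is attached to, as a rigid sphere. The 2 nm radius, water-like viscosity of 0.001 Pa·s, and temperature of 298 K are illustrative assumptions. The sketch does not implement the Saffman–Delbrück cylinder model for membranes.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def tau_r_sphere(radius_m, viscosity_pa_s, temperature_k=298.0):
    """Rotational correlation time of a sphere, tau_R = 4*pi*eta*r^3/(3*k_B*T)."""
    return 4 * math.pi * viscosity_pa_s * radius_m ** 3 / (3 * K_B * temperature_k)


def rotational_diffusion_coefficient(tau_r):
    """D_R = 1/(6*tau_R), rearranging Equation 3.12 (units: rad^2/s)."""
    return 1.0 / (6.0 * tau_r)


def initial_anisotropy(theta_rad):
    """Fundamental anisotropy r0 = (3*cos^2(theta) - 1)/5."""
    return (3 * math.cos(theta_rad) ** 2 - 1) / 5


def anisotropy_decay(t, r0, tau_r):
    """Free-rotation decay r(t) = r0 * exp(-t/tau_R)."""
    return r0 * math.exp(-t / tau_r)


if __name__ == "__main__":
    tau = tau_r_sphere(2e-9, 1e-3)
    print(f"tau_R ~ {tau * 1e9:.1f} ns, D_R ~ {rotational_diffusion_coefficient(tau):.2e} rad^2/s")
    print(f"r0 ranges from {initial_anisotropy(math.pi / 2):+.2f} to {initial_anisotropy(0.0):+.2f}")
    print(f"r(tau_R) = {anisotropy_decay(tau, 0.4, tau):.3f}")
```

A 2 nm sphere in a water-like medium gives a rotational correlation time of several nanoseconds, in line with the cytoplasmic values quoted above. Because τR scales linearly with viscosity, a medium ~1000 times more viscous would push this toward microseconds. A real bilayer, however, calls for the anisotropic cylinder treatment instead.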

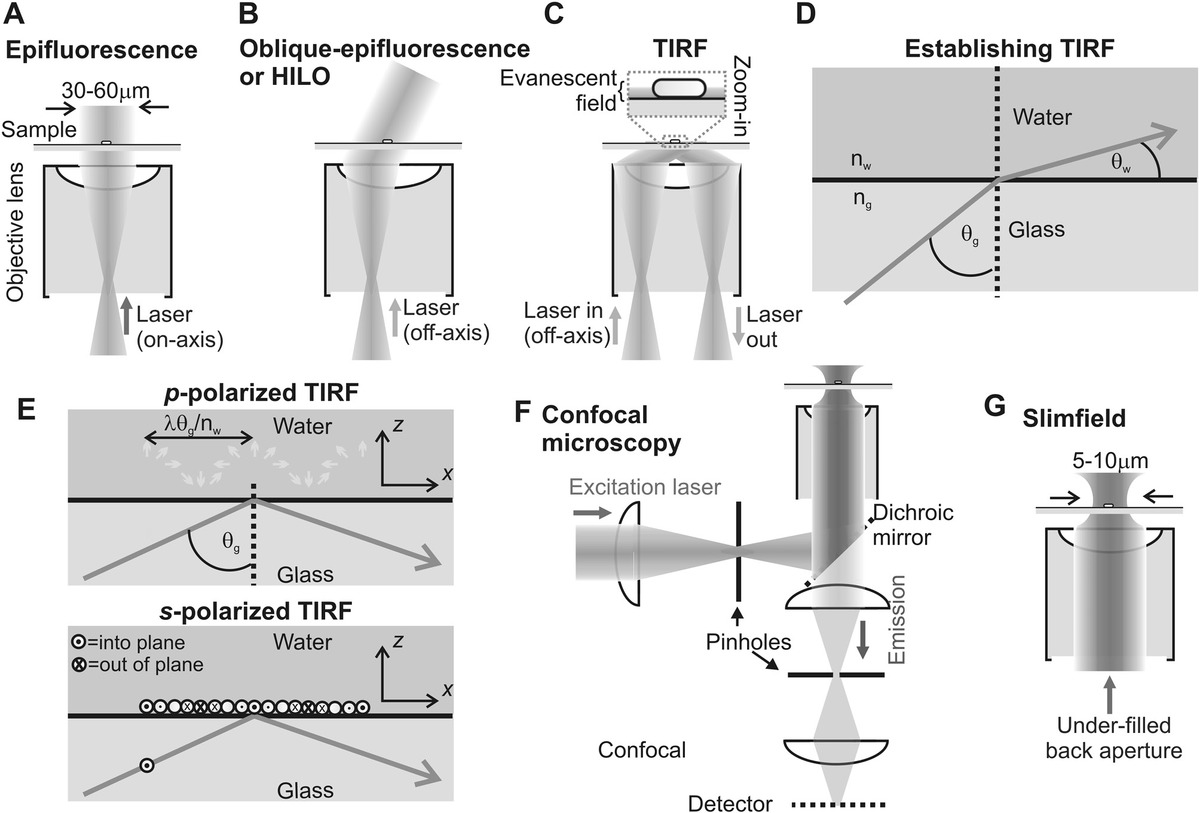

3.2.5 Optical Interferometry

There are two principal optical interferometry techniques for bulk in vitro samples: dual polarization interferometry (DPI) and surface plasmon resonance (SPR). In DPI, a reference laser beam is guided through an optically transparent sample support, while a sensing beam is directed through the support at an oblique angle to the surface. This high angle of incidence causes the beam to be totally internally reflected from the surface. A by-product is an evanescent field extending into the sample, which for biophysical investigations is generally solvated by water, with a characteristic penetration depth of ~100 nm. The same process generates the evanescent field in total internal reflection fluorescence (TIRF) microscopy, discussed later in this chapter. Small quantities of material from the sample that bind to the surface have subtle but measurable effects on the polarization of light in this evanescent field. These effects can be detected with high sensitivity by measuring the interference pattern formed between the sensing and reference beams. DPI gives information on the thickness and refractive index of the surface-adsorbed material.
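The ~100 nm figure is consistent with the standard expression for the 1/e decay depth of evanescent-field intensity, d = λ/(4π√(n₁²sin²θ − n₂²)). This expression is valid above the critical angle θc = arcsin(n₂/n₁). The formula is not given in the text. The glass (n₁ = 1.52) and water (n₂ = 1.33) refractive indices, the 633 nm wavelength, and the 70° angle below are illustrative assumptions.

```python
import math


def critical_angle_deg(n1, n2):
    """Critical angle for total internal reflection from medium n1 into n2 (n1 > n2)."""
    return math.degrees(math.asin(n2 / n1))


def penetration_depth_nm(wavelength_nm, n1, n2, theta_deg):
    """1/e depth of the evanescent intensity beyond a totally reflecting interface."""
    s = (n1 * math.sin(math.radians(theta_deg))) ** 2 - n2 ** 2
    if s <= 0:
        raise ValueError("angle is at or below the critical angle: no evanescent field")
    return wavelength_nm / (4 * math.pi * math.sqrt(s))


if __name__ == "__main__":
    print(f"critical angle ~ {critical_angle_deg(1.52, 1.33):.1f} deg")
    print(f"depth at 70 deg ~ {penetration_depth_nm(633, 1.52, 1.33, 70):.0f} nm")
```

Moving the angle closer to the critical angle lengthens the penetration depth, and steeper angles shorten it.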

SPR operates similarly in that an evanescent field is generated, but here a thin layer of metal, ~10 nm thick, is first deposited on the outside surface (usually as part of a commercial SPR chip that can be removed and replaced as required). At a certain angle of incidence, less of the sensing beam is reflected from the surface because of a resonance effect in which energy is coupled into oscillations of the electrons at the metal surface interface, known as surface plasmons. This measured drop in reflected intensity is a function of the absolute amount of material from the sample adsorbed on the metal surface, and so DPI and SPR are essentially complementary. Both yield information on the stoichiometry and binding kinetics of biological samples. They can be used to investigate, for example, how cell membrane receptors function: if the surface is first coated with purified receptor proteins and the sample chamber contains a ligand thought to bind to the receptor, then both DPI and SPR can be used to measure the strength of this binding, and of subsequent unbinding, and to estimate the relative number of ligand molecules that bind to each receptor protein (Figure 3.1d).
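Binding strength and kinetics of this kind are often extracted by fitting the SPR response R(t) to a simple 1:1 (Langmuir) interaction model, dR/dt = k_a C (R_max − R) − k_d R, whose equilibrium dissociation constant is K_D = k_d/k_a. The minimal sketch below evaluates the closed-form association and dissociation phases of that model. The rate constants, analyte concentration, and R_max are hypothetical values, and real receptor–ligand data may need more elaborate models (e.g., multiple binding sites or mass-transport effects).

```python
import math

def association(t, conc, ka, kd, r_max):
    """1:1 model response during analyte injection, starting from R = 0."""
    k_obs = ka * conc + kd                 # observed relaxation rate (1/s)
    r_eq = r_max * ka * conc / k_obs       # equilibrium response at this concentration
    return r_eq * (1.0 - math.exp(-k_obs * t))

def dissociation(t, r0, kd):
    """Exponential decay of the response after the analyte is washed out."""
    return r0 * math.exp(-kd * t)

# Hypothetical parameters: ka in 1/(M s), kd in 1/s, conc in M, response in arbitrary units
ka, kd, conc, r_max = 1e5, 1e-3, 50e-9, 100.0
K_D = kd / ka  # ~1e-8 M, i.e. ~10 nM
for t in (0, 100, 300):
    print(t, round(association(t, conc, ka, kd, r_max), 2))
r_end = association(300.0, conc, ka, kd, r_max)
print("K_D =", K_D, "M; response 600 s into wash-out:", round(dissociation(600.0, r_end, kd), 2))
```

Measuring the plateau response at several analyte concentrations and fitting R_eq = R_max C/(C + K_D) is a common alternative route to the same K_D.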

3.2.6 Photothermal Spectroscopy

There is a group of related photothermal spectroscopy techniques that, although perhaps less popular now than toward the end of the last century owing to improvements in the sensitivity of other optical spectroscopy methods, are still very sensitive and operate by measuring the optical absorption of a sample through its thermal effects. Photothermal spectroscopy is still used to quantify the kinetics of biochemical reactions that are initiated by light, for example, by direct photochemical reactions or by light-induced environmental changes such as changes in cellular pH. The time scales of these processes typically span a broad range, from 10⁻¹² to 10⁻³ s, which is hard to cover using other spectroscopy methods.

Incident light that is absorbed by the sample but not re-emitted as fluorescence is largely converted to heat. Therefore, the temperature rise in an optical absorption measurement is a characteristic of the sample and a useful parameter for comparing different biological materials. Photothermal deflection spectroscopy quantifies the changes in a sample’s refractive index upon heating. It uses a probe laser beam on an optically thin sample and is useful for highly absorbing biomolecule samples in solution whose transmitted signal is too low to be measured accurately. Photothermal diffraction can also be used to characterize a biological material; it uses the interference pattern produced by multiple laser sources in the sample to generate a diffraction grating whose aperture spacing varies with the thermal properties of the sample.

In laser-induced optoacoustic spectroscopy, a ~10⁻⁹ s VIS laser pulse incident on a sample in solution generates an acoustic pressure wave. The time evolution of the pressure pulse can be followed by high-bandwidth piezoelectric transducers, typically over a time scale of ~10⁻⁵ s, and related back to time-resolved binding and conformational changes in the biomolecules of the sample. The technique is a tool of choice for monitoring time-resolved charge transfer interactions between different amino acids in a protein, since no existing alternative spectroscopic technique has the sensitivity to do so.

3.3 Light Microscopy: The Basics

Light microscopy in some ways has gone full circle since its modern development in the late seventeenth and early eighteenth centuries by pioneers such as Robert Hooke (Hooke, 1665; but see Fara, 2009 for a modern discussion) and Antonj van Leeuwenhoek (see van Leeuwenhoek, 1702). In these early days of modern microscopy, different whole organisms were viewed under the microscope. With technical advances in light microscopy, and in the methods used for sample preparation, the trend over the subsequent three centuries was to focus on smaller and smaller length scale features.

3.3.1 Magnification

The prime function of a light microscope is to magnify features in a biological sample illuminated by VIS light, while maintaining acceptable image clarity and contrast and keeping optical aberration effects low. Magnification is performed most efficiently using a serial combination of lenses. In crude form, a single lens is in effect a very simple light microscope, but one offering limited magnification. In its simplest practical form, a light microscope consists of a high numerical aperture (NA) objective lens placed very close to the sample, with a downstream imaging lens focusing the sample image onto a highly sensitive light detector such as a high-efficiency charge-coupled device (CCD) camera, or sometimes a photomultiplier tube (PMT) in the case of a scanning system such as in confocal microscopy (Figure 3.2a). Most microscopes are either upright (objective lens positioned above the sample stage) or inverted (objective lens positioned below the sample stage).

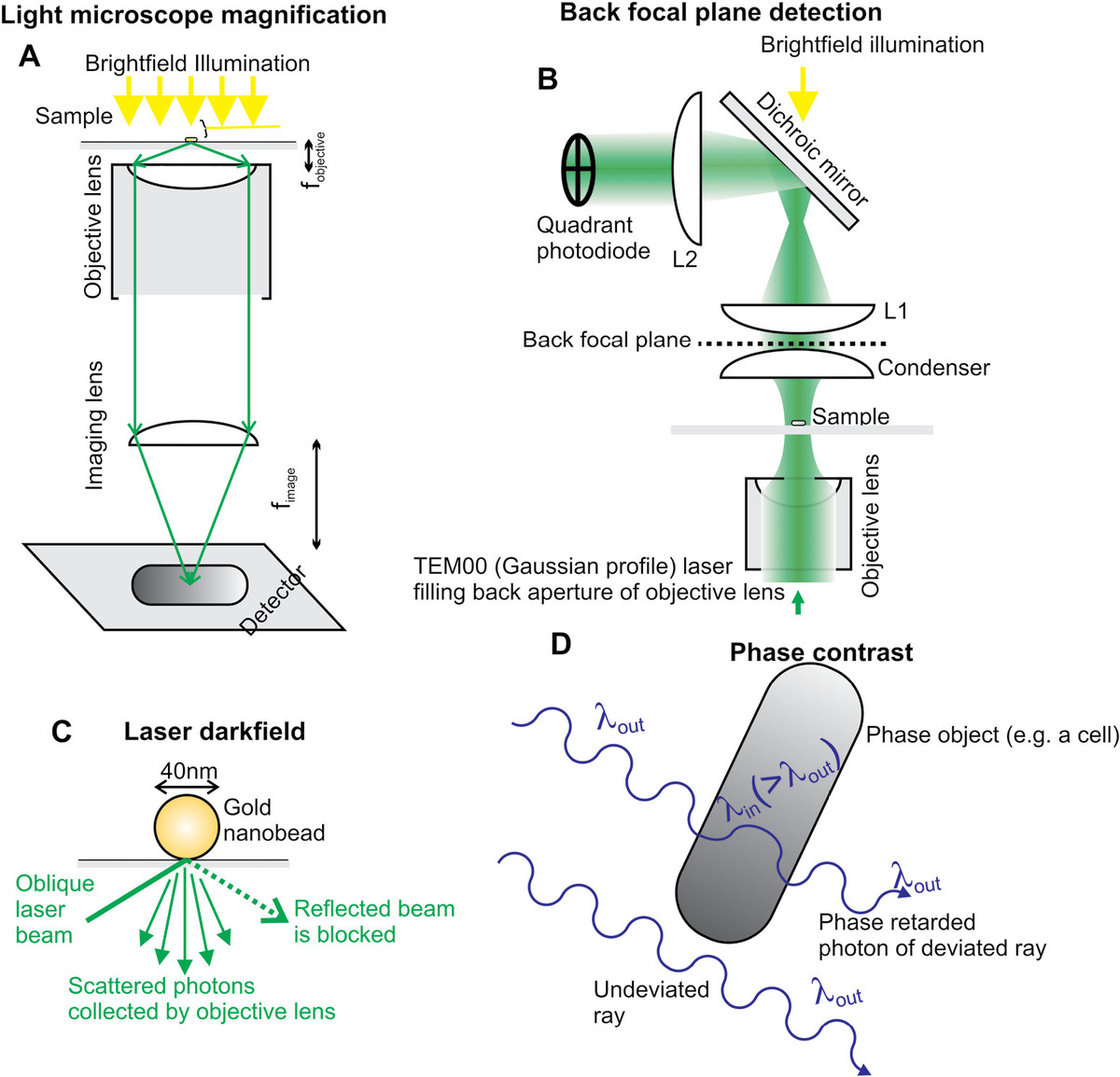

Figure 3.2 Light microscopy methods. (a) Magnification in the simplest two-lens light microscope. (b) Back focal plane detection (magnification onto quadrant photodiode is f2/f1 where fn is the corresponding focal length of lens Ln). (c) Laser dark field. (d) Phase retardation of light through a cell sample in phase contrast microscopy.

The two-lens microscope operates as a simple telescope system, with magnification M given by the ratio of the imaging lens focal length to that of the objective lens (the latter typically being a few millimeters):

$$M = \frac{f_{\mathrm{image}}}{f_{\mathrm{objective}}} \tag{3.18}$$

In practice, there is likely to be a series of several lens pairs placed between the imaging lens shown and the detector, arranged in effect as telescopes, for example, with focal lengths f1, f2, f3, f4, …, fn corresponding to lenses placed between the objective lens and the camera detector. The magnification of such an arrangement is simply the multiplicative combination of the separate magnifications from each lens pair:

$$M_{\mathrm{total}} = M\,\frac{f_2}{f_1}\,\frac{f_4}{f_3}\cdots\frac{f_n}{f_{n-1}} \tag{3.19}$$

Such additional lens pairs allow higher magnifications to be obtained without requiring a single imaging lens with an exceptionally high or low focal length, which would either make the microscope impractically large or result in severe optical aberration effects. A typical standard light microscope can generate effective total magnifications in the range 100–1000.
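The multiplicative rule above can be sketched as a short calculation: the basic magnification is the imaging lens focal length divided by the objective focal length, and each additional telescope pair contributes a factor of its output focal length over its input focal length. All focal lengths and the camera pixel size below are hypothetical values chosen for illustration; the function name is likewise our own.

```python
from math import prod

def total_magnification(f_objective, f_imaging, relay_pairs=()):
    """Basic two-lens magnification times f_out / f_in for each extra telescope pair."""
    m = f_imaging / f_objective
    return m * prod(f_out / f_in for f_in, f_out in relay_pairs)

# Hypothetical lenses (mm): 2 mm objective, 200 mm imaging lens, one 100 -> 150 mm relay pair
M = total_magnification(2.0, 200.0, [(100.0, 150.0)])
pixel_um = 16.0  # hypothetical camera pixel edge length (micrometres)
print(M, "x; one pixel spans", round(pixel_um / M * 1000), "nm at the sample")
```

Dividing the camera pixel size by the total magnification, as in the last line, gives the effective pixel size at the sample, which is often the more useful number when planning an experiment.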

3.3.2 Depth of Field

The depth of field (d_f, also known as the “depth of focus”) is a measure of the thickness, parallel to the optical axis of the microscope, over which a sample appears to be in focus. It is conventionally defined as one quarter of the distance between the intensity minima along the optic axis above and below the exact focal plane (i.e., where the sample in principle should be most sharply in focus) in the diffraction image produced by a single point-source light-emitting object in the focal plane. This 3D diffraction image is a convolution of the point spread function (PSF) of the imaging system with a delta function. On this basis, d_f can be approximated as

$$d_f \approx \frac{\lambda\, n_m}{\mathrm{NA}^2} + \frac{n_m\, d_R}{M_L\,\mathrm{NA}} \tag{3.20}$$

where

- λ is the wavelength of light being detected

- n_m is the refractive index of the medium between the microscope objective lens of numerical aperture NA and the glass microscope coverslip/slide (either air, n_m = 1, or, for high-magnification objective lenses, immersion oil, n_m = 1.515)

- d_R is the smallest length scale feature that can be resolved by the image detector (e.g., the pixel size of a camera detector), with the image projected onto the detector at a total lateral magnification M_L between it and the sample.
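A quick numerical check of the two regimes described later in this section can be sketched with the commonly quoted depth-of-field expression d_f ≈ λn_m/NA² + n_m d_R/(M_L NA), a diffraction term plus a detector-sampling term. The 550 nm wavelength and 6.5 μm camera pixel are assumptions for illustration, so the results should only be compared with the text in order of magnitude.

```python
def depth_of_field(wavelength_um, n_m, na, d_r_um, m_l):
    """Diffraction term plus detector-sampling term; all lengths in micrometres."""
    return wavelength_um * n_m / na ** 2 + n_m * d_r_um / (m_l * na)

# Assumed: 550 nm detected light and a 6.5 um camera pixel
low_mag = depth_of_field(0.55, 1.0, 0.3, 6.5, 100)      # air objective, tissue-scale imaging
high_mag = depth_of_field(0.55, 1.515, 1.4, 6.5, 1000)  # oil-immersion objective, cells
print(f"low magnification: {low_mag:.1f} um; high magnification: {high_mag:.2f} um")
```

At high NA the first (diffraction) term dominates, so in that regime the depth of field depends mainly on λ, n_m, and NA rather than on the detector pixel size.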

3.3.3 Light Capture from the Sample

The NA of an objective lens is defined as n_m sin θ, where n_m is the refractive index of the imaging medium. The angle θ is the maximum half-angle of the cone of light scattered from the sample that can be captured by the objective lens. In other words, higher NA lenses capture more light from the sample. In air, n_m = 1, so to increase the NA further, high-power objective lenses use immersion oil: a small blob of imaging oil, which has the same high refractive index as the glass, is placed in optical contact between the glass microscope slide or coverslip and the objective lens.

The solid angle Ω subtended by this maximum half angle can be shown using simple integration over a sphere to be

$$\Omega = 2\pi\left(1 - \cos\theta\right) \tag{3.21}$$

Most in vivo studies, that is, those done on living organisms or cells, are likely to use a low magnification of M_L ~ 100 with a low numerical aperture objective lens of NA ~ 0.3 so as to capture a large section of tissue in each acquired image, giving a d_f of ~10 μm. Cellular studies often use a magnification an order of magnitude greater than this, with NA values of up to ~1.5, giving a d_f of 0.2–0.4 μm.
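Combining the NA definition with the solid angle Ω = 2π(1 − cos θ) gives the fraction Ω/4π of light from an isotropic point emitter that enters the objective lens. The sketch below compares the two regimes just described, assuming an isotropic emitter and ignoring reflection losses and refraction at interfaces, so the numbers are idealized upper bounds.

```python
import math

def collection_fraction(na, n_m):
    """Fraction of isotropic emission captured: Omega / (4 pi) = (1 - cos theta) / 2."""
    theta = math.asin(na / n_m)                   # maximum half-angle, from NA = n_m sin(theta)
    omega = 2 * math.pi * (1 - math.cos(theta))   # solid angle of the collection cone
    return omega / (4 * math.pi)

print(f"NA 0.3 in air: {collection_fraction(0.3, 1.0):.3f}")
print(f"NA 1.4 in oil: {collection_fraction(1.4, 1.515):.3f}")
```

The roughly 13-fold difference in collected light between these two objectives is one reason high-NA oil-immersion lenses are favored for low-light work such as single-molecule fluorescence.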

Note that the human eye has a maximum numerical aperture of ~0.23 and can accommodate typical distances between ~25 cm and infinity. This means that a sample viewed directly via the eye through a microscope eyepiece unit, as opposed to imaged onto a planar camera detector, can be observed with a far greater depth of field than Equation 3.20 suggests. This can be useful in terms of visual inspection of a sample prior to data acquisition from a camera device.

3.3.4 Photon Detection at the Image Plane

The technology of photon detection in light microscopes has improved dramatically over the past few decades. Light microscopes use either an array of pixel detectors in a high-sensitivity camera or a single detector in the form of a PMT or avalanche photodiode (APD). A PMT uses the photoelectric effect at a primary photocathode to generate a primary electron following absorption of an incident photon. This electrical signal is then amplified through secondary emission of electrons in the device. The electron multiplier consists of a series of up to 12 electrodes, called dynodes, held at incrementally higher voltages and terminated by a final anode. At each dynode, ~5 new secondary electrons are generated for each incident electron, giving a total amplification of ~10⁸. This is sufficient to generate a sharp current pulse, typically ~1 ns in duration, following the arrival of the incident photon, with single-photon detection sensitivity.
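The quoted amplification follows from compounding the per-dynode yield: with δ secondary electrons per incident electron at each of N dynodes, an ideal multiplier has gain δᴺ. The sketch below uses δ = 5 and N = 12 from the text and assumes no collection losses or stage-to-stage fluctuations, which real PMTs do have.

```python
def cascade_gain(secondary_per_stage, n_stages):
    """Overall gain of an ideal dynode chain: delta ** N."""
    return secondary_per_stage ** n_stages

gain = cascade_gain(5, 12)  # 244140625, i.e. ~2 x 10^8
charge_pc = gain * 1.602176634e-19 * 1e12  # charge from one photoelectron, in picocoulombs
print(f"gain ~ {gain:.2e}; ~{charge_pc:.0f} pC per photoelectron")
```

Delivered over a pulse of about a nanosecond, a few tens of picocoulombs corresponds to a peak current of tens of milliamperes, comfortably above electronic noise.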

An APD is an alternative technology to a PMT. It also uses the photoelectric effect, but with semiconductor photon detection coupled to electron–hole avalanche multiplication of the signal. A high reverse voltage accelerates a primary electron, produced following initial photon absorption in the semiconductor, to an energy sufficient to generate secondary electrons on impact with other regions of the semiconductor (similarly, a highly energetic electron hole travels in the opposite direction), ultimately generating an enormous amplification of free electron–hole pairs. This is analogous to the amplification stage in a PMT, but here the amplification occurs in the same semiconductor chip. The total multiplication of signal is >10³, which is less than that of a PMT but still sufficient for single-photon detection, with the advantage of a much smaller footprint that in some cases permits a 2D array of APDs to be made, similar to pixel-based camera detectors.

Many light microscopes use camera-based detection rather than PMT/APD detection, primarily because it samples faster, with no need for slow mechanical scanning over the sample. Several standard light microscopy investigations that are not photon limited (e.g., bright-field investigations) use CCD image sensors, with the most sensitive light microscopes using electron-multiplying CCD (EMCCD) detection or complementary metal-oxide semiconductor (CMOS) technology. A CCD image sensor contains a 2D array of individual p-doped metal-oxide semiconductor (MOS) pixels. MOS pixels act as micron length scale capacitors with a voltage bias set just above the threshold for inversion, and thus generate electrons and holes on absorption of incoming photons.

Each MOS capacitor accumulates an electric charge proportional to the light absorbed, and control circuitry transfers this charge to its neighbor along each 1D line of pixels, such that the last MOS capacitor in the line dumps its charge into the MOS pixel in the next line up or down, ultimately with all the charge transferred into an amplifier. This serial voltage data stream can then be reconstructed as a 2D image. A variant of the CCD is the intensified CCD (ICCD), which comprises an initial detection step on a phosphor screen, with this phosphor light image then detected by a CCD behind it; this improves the ultimate photon detection efficiency to >90%.
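The idea that a 2D charge pattern leaves the chip as a single serial stream, and is then rebuilt as an image, can be illustrated with a toy model. The sketch below assumes one common readout scheme, in which rows shift one at a time into a serial register that is clocked out pixel by pixel to a single amplifier; it ignores transfer losses and noise, and the function names are hypothetical.

```python
def ccd_readout(charge):
    """Toy CCD readout: rows shift into a serial register, then clock out pixel by pixel."""
    rows = [list(r) for r in charge]  # copy so the input frame is not modified
    stream = []
    while rows:
        serial_register = rows.pop()  # row nearest the register shifts in first
        while serial_register:
            stream.append(serial_register.pop())  # pixel nearest the amplifier first
    return stream

def reconstruct(stream, n_rows, n_cols):
    """Rebuild the 2D image by reversing the known readout order."""
    image = [[0] * n_cols for _ in range(n_rows)]
    i = 0
    for r in reversed(range(n_rows)):
        for c in reversed(range(n_cols)):
            image[r][c] = stream[i]
            i += 1
    return image

frame = [[1, 2, 3], [4, 5, 6]]  # accumulated charge per pixel (arbitrary units)
data = ccd_readout(frame)
print(data)  # [6, 5, 4, 3, 2, 1]
print(reconstruct(data, 2, 3) == frame)
```

Because every pixel passes through the same output amplifier, readout time grows with the number of pixels, one reason smaller pixel arrays can be read out faster.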

Many cheaper cameras use a CMOS chip (these are now found ubiquitously in webcams and mobile phone cameras, and also in microprocessors in nonimaging applications). The core feature of a CMOS chip is a symmetrical back-to-back combination of n- and p-type MOS field effect transistors, which requires less additional circuitry and is more power efficient than CCD pixels, ultimately enabling substantially faster imaging speeds. A scientific CMOS camera has an inferior photon collection efficiency of ~50% compared with ICCDs or EMCCDs but can acquire data faster by an order of magnitude or more, equivalent to several thousand image frames per second.

An EMCCD uses a solid-state electron-multiplying step at the end of each line of CCD pixels. This amplifies relatively weak electrical signals above any readout noise added by the final output amplification step. The electron multiplication normally has a few hundred stages, during which electrons are transferred and multiplied by impact ionization, which generates secondary electrons to amplify the signal. The resultant amplification is up to ~10³, which compares favorably with APDs and ICCDs but with much-reduced readout noise. EMCCDs are currently the photon detection tool of choice for low-light microscopy investigations in biology, for example, single-molecule fluorescence detection, having up to ~95% photon detection efficiency and a reasonable sampling speed equivalent to ~1 ms per image frame for small pixel arrays of ~100 pixels edge length, relevant to many fast biological processes.
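Unlike a PMT dynode, each EMCCD multiplication stage adds only a small average gain. A minimal sketch, assuming each of N stages multiplies the charge by (1 + p) on average with a small impact-ionization probability p, shows how a few hundred such stages compound to a mean gain of ~10³. The stage count and probability used here are hypothetical, illustrative values.

```python
def em_gain(p_per_stage, n_stages):
    """Mean gain when each of N transfers multiplies the charge by (1 + p) on average."""
    return (1.0 + p_per_stage) ** n_stages

# Hypothetical register: 512 stages, 1.35% impact-ionization probability per stage
g = em_gain(0.0135, 512)
print(f"mean EM gain ~ {g:.0f}")

# Per-stage probability needed for a target mean gain of 1000 over 512 stages
p_needed = 1000 ** (1 / 512) - 1
print(f"p for gain 1000: {p_needed:.4f}")
```

Since the gain is exponential in p, small changes in the multiplication voltage, which controls p, give large changes in overall gain; this is how the EM gain is tuned in practice.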

3.4 Nonfluorescence Microscopy

Basic light microscopy is invaluable as a biophysical tool. However, its biggest weakness is poor image contrast, since most of the material in living organisms is water, on average ~60%. Because cells are surrounded by a fluid environment that is also largely water, the signal obtained from VIS light scattered from cellular objects is small. Several adaptations to basic light microscopy can, however, be applied to enhance image contrast.

3.4.1 Bright-Field and Dark-Field Microscopy

Bright-field microscopy relies on measuring differences in the absorbed or scattered intensity of light as it passes through different features of the sample. Incident light is usually generated by either a tungsten halogen filament broadband source or a bright LED and is captured by a short focal length collector lens. Light is then directed through a condenser lens using a Köhler illumination design, which involves forming an image of the light source in the back focal plane of the condenser (Figure 3.2b). This results in a collimated beam incident on the sample and a uniform illumination intensity in the focal plane of the microscope.

A cell on a microscope coverslip/slide whose shape is broadly symmetrical on either side of a plane parallel to the focal plane, taken through its midheight, will exhibit minimal bright-field image contrast between the foreground cellular material and the background cell media. A simple approach to increase the contrast of the cell outline is defocusing microscopy. Negative defocusing, in which the focal plane is moved below the midheight of the cell, closer to the objective lens, generates an image of a dark cell body with a higher-intensity cell perimeter, while positive defocusing generates the inverse. This is due to light interference at the image plane between undeviated and transmitted light beams whose optical path length (OPL), the product of the geometrical path length of the beam and the refractive index of the optical medium, depends upon the extent of defocus. The contrast at the cell perimeter is a function of the radius of curvature of the cell, and for cells of a micron length scale, a defocus of a few hundred nanometers generates optimum perimeter contrast.

Another approach to improve contrast is to tag a biomolecule with a reporter probe designed to generate a high local signal upon excitation with light; for example, a probe coated in a high atomic number metal such as gold generates a high scatter signal at certain wavelengths of VIS light. Here, photon scattering is elastic, so the wavelength of the scattered light is the same as that of the incident light; thus, any scattered light not from the labeled biomolecules, including back reflections from the glass microscope coverslip/slide, must be blocked. The regions of the sample not containing tagged biomolecules appear dark on the camera detector, hence the name dark-field microscopy. In transmitted light dark field, a modified condenser lens blocks out the central aperture, resulting in highly oblique illumination of the sample; nonscattered light emerges at too steep an angle to be captured by the objective lens, whereas light diffracted by the sample is forward scattered at small angles and can be captured.

A similar approach can be used with reflected light, generally using a laser source (hence laser dark field), in which an obliquely angled laser beam emerging from the objective lens is either transmitted through the coverslip in the absence of any sample or backscattered by the sample into the objective lens (Figure 3.2c). Contrast can be enhanced further by the generation of surface plasmons, whose intensity is a function of the particle size (a few tens of nanometers) and the laser wavelength. This can generate very high SNRs on in vitro samples, facilitating extremely high time resolutions of ~10⁻⁶ s.

Dark-field microscopy has more limited use with living samples than bright-field microscopy because a cell is large compared with the scattering source of interest, whether a native unlabeled biomolecule or a biomolecule labeled with a dark-field probe (e.g., a gold-coated bead tens of nanometers in diameter), so significant scatter from the cell body itself can swamp the probe signal. The scatter signal from unlabeled biomolecules can be prohibitively small, but using a scatter label also presents technical challenges; it is not easy to specifically label biomolecules inside living cells with, for example, a gold nanoscale bead without nonspecific labeling of other cellular structures.

For certain cell types (e.g., prokaryotes), it is also difficult to introduce such a large scatter probe while keeping the cell intact, limiting the application to accessible surface features. The practical lower size limit for detecting a reproducible, measurable signal from the scattered light is a few tens of nanometers; a probe of this size is large compared with single biomolecules, implying some steric hindrance effects that may impair normal biological function. Note that advanced new techniques such as interferometric scattering microscopy use interferometric detection of scattered light and can detect the scattered signal directly from unlabeled biomolecules themselves (see Chapter 4).

3.4.2 Contrast Enhancement Using Optical Interference

Optical interference techniques can be used to modify a light microscope to increase image contrast. Phase contrast microscopy uses differences in refractive index inside a biological sample. Optically transparent cells and tissues exhibit a range of refractive indices n_t that vary with spatial location across the tissue, 1.35–1.45 being typical for cellular material, compared with that of water, n_w ~ 1.33. As a result, the phase of light propagating through a region of sample with a length Δz of a few microns parallel to the optic axis will be retarded. Such objects are examples of phase objects. For many cells, the retardation is roughly a quarter of a wavelength λ relative to light passing through the largely aqueous environment between cells (Figure 3.2d). Comparing the speed of light in water with that inside a cell or tissue on the basis of their refractive indices gives the following relationship:

$$\Delta\phi = \frac{2\pi}{\lambda}\left(n_t - n_w\right)\Delta z \tag{3.22}$$

where Δφ is the relative phase retardation. An annulus aperture in the front focal plane of the condenser lens, similar to that used in dark-field forward scatter microscopy to block out the central aperture of illumination, generates a cone of collimated light onto the sample. Emergent light transmitted through the sample and collected by the objective lens consists of both undeviated light that has not encountered any biological material (captured because the cone of light is less oblique than that used in dark-field microscopy) and diffracted (forward-scattered) light that has acquired a phase retardation relative to the undeviated light.

A phase ring in the back focal plane of the objective lens, in a conjugate image plane to the condenser annulus, shifts the phase of the undeviated light by a quarter wavelength relative to the diffracted light. In positive phase contrast microscopy, the ring advances the undeviated light, so that the total relative phase shift becomes half a wavelength, the condition for destructive interference, and the sample appears darker relative to the background. In negative phase contrast microscopy, the ring instead retards the undeviated light by a further quarter wavelength, bringing the two components back into phase so that they interfere constructively and the sample appears brighter relative to the background. The phase shift is set by the local glass thickness in the ring, which is often also coated with a thin, partially absorbing layer of metal such as aluminum to attenuate the undeviated light and further enhance contrast.

In other words, this process transforms phase information at the sample into amplitude contrast in the intensity of the final image. The length scale of a few microns over which the retardation of the light is typically a quarter of a wavelength is comparable to some small cells in tissues, as well as cellular organelle features such as the nucleus and mitochondria. It is therefore ideal for enhancing the image contrast of cellular components.
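The quarter-wavelength figure can be checked with the phase relation Δφ = (2π/λ)(n_t − n_w)Δz introduced above, or equivalently by expressing the optical path difference (n_t − n_w)Δz in units of λ. The sketch assumes a 3 μm thick region with n_t = 1.38 in water (n_w = 1.33) and 550 nm light; these are illustrative values within the ranges quoted in the text.

```python
import math

def optical_path_difference(n_t, n_w, dz):
    """Extra optical path through a region of thickness dz (same units as dz)."""
    return (n_t - n_w) * dz

def phase_retardation(n_t, n_w, dz, wavelength):
    """Relative phase retardation in radians: 2*pi*(n_t - n_w)*dz / wavelength."""
    return 2 * math.pi * optical_path_difference(n_t, n_w, dz) / wavelength

# Assumed: 3 um thick region, n_t = 1.38 in water (n_w = 1.33), 550 nm light
opd = optical_path_difference(1.38, 1.33, 3.0)  # micrometres
dphi = phase_retardation(1.38, 1.33, 3.0, 0.55)
print(f"OPD = {opd:.3f} um = {opd / 0.55:.2f} wavelengths; phase = {dphi:.2f} rad")
```

The result, about 0.27λ or roughly π/2 rad, is close to the quarter-wavelength retardation that the phase ring is designed to complement.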

Polarized light microscopy can increase the relative contrast of birefringent samples. Birefringence, as discussed for polarization spectroscopy techniques in Section 3.2.4, occurs when a sample has a refractive index that depends upon the orientation of the polarization E-field vector of the incident light. This is often due to repeating structural features in a sample that have a spatial periodicity over a length scale comparable to, or less than, the wavelength of the light, which is true for several biological structures. In other words, birefringence is a characteristic of certain crystals or, more relevantly for biological samples given the fluidity of the water-solvent environment and of other fluidic structures such as phospholipid bilayers, of liquid crystals.

There are several examples of birefringent biological liquid crystals. These include fibrous proteins with well-defined spatial periodicity between bundles of smaller fibrils, such as collagen in the extracellular matrix; cell membranes and certain proteins in cell membranes; cytoskeletal proteins; structural polymers in the cell walls of plants (e.g., cellulose) and certain bacteria (e.g., peptidoglycan); and the highly periodic protein capsid coats of viruses. Polarization microscopy is an excellent tool for generating images of these biological liquid crystal features, and there are also examples of nonliquid crystalline biomolecule samples that can be investigated similarly (e.g., crystalline arrays of certain vitamins).

For polarization microscopy, a polarizer is positioned in the illumination path between the VIS light source and a condenser lens, before the sample, and a second polarizer described as an analyzer is positioned after the transmitted light has emerged from the sample, close to the back aperture of the objective lens. The transmitted light through a birefringent sample can be split into two orthogonally polarized light components of p and s, which are either parallel to the plane of the optic axis or perpendicular to it, respectively. The speed of the light in each of these separate components is different due to the polarization dependence of the refractive index in the sample. These components therefore become out of phase with each other but are recombined with various combinations of constructive and destructive interference during their passage through the analyzer, depending upon the relative position on the sample, which is then imaged onto a camera (or viewed through eyepieces) in the normal way for basic light microscopy. Polarized light microscopy can quantify the precise amount of retardation that occurs in each polarization direction and thus generates information about the relative orientation of spatially periodic molecular structures of the birefringent sample. Some of these structures are involved in mechanical features of tissues and cells, and thus polarization microscopy can be used to probe biomechanics (see Chapter 6).
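How retardance turns into image intensity can be sketched with the standard result for a uniform birefringent layer between crossed polarizers: I = I₀ sin²(2φ) sin²(πΓ/λ), where Γ = Δn·d is the retardance and φ the angle between the sample's slow axis and the polarizer. The sketch assumes a crossed analyzer and a uniform layer; the birefringence and thickness values are hypothetical, chosen to resemble a weakly birefringent fiber bundle.

```python
import math

def crossed_polarizer_intensity(i0, delta_n, thickness, wavelength, azimuth_deg):
    """Transmitted intensity for a uniform birefringent layer between crossed polarizers.

    Retardance Gamma = delta_n * thickness; azimuth is the angle between the sample
    slow axis and the polarizer transmission axis.
    """
    retardance = delta_n * thickness
    phi = math.radians(azimuth_deg)
    return i0 * math.sin(2 * phi) ** 2 * math.sin(math.pi * retardance / wavelength) ** 2

# Assumed: delta_n = 0.003, 2 um thick, 550 nm light (lengths in micrometres)
for angle in (0, 22.5, 45):
    print(angle, crossed_polarizer_intensity(1.0, 0.003, 2.0, 0.55, angle))
```

The sin²(2φ) factor explains why birefringent structures appear brightest at 45° to the polarizer and vanish when aligned with it, which is what allows their orientation to be inferred by rotating the sample.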

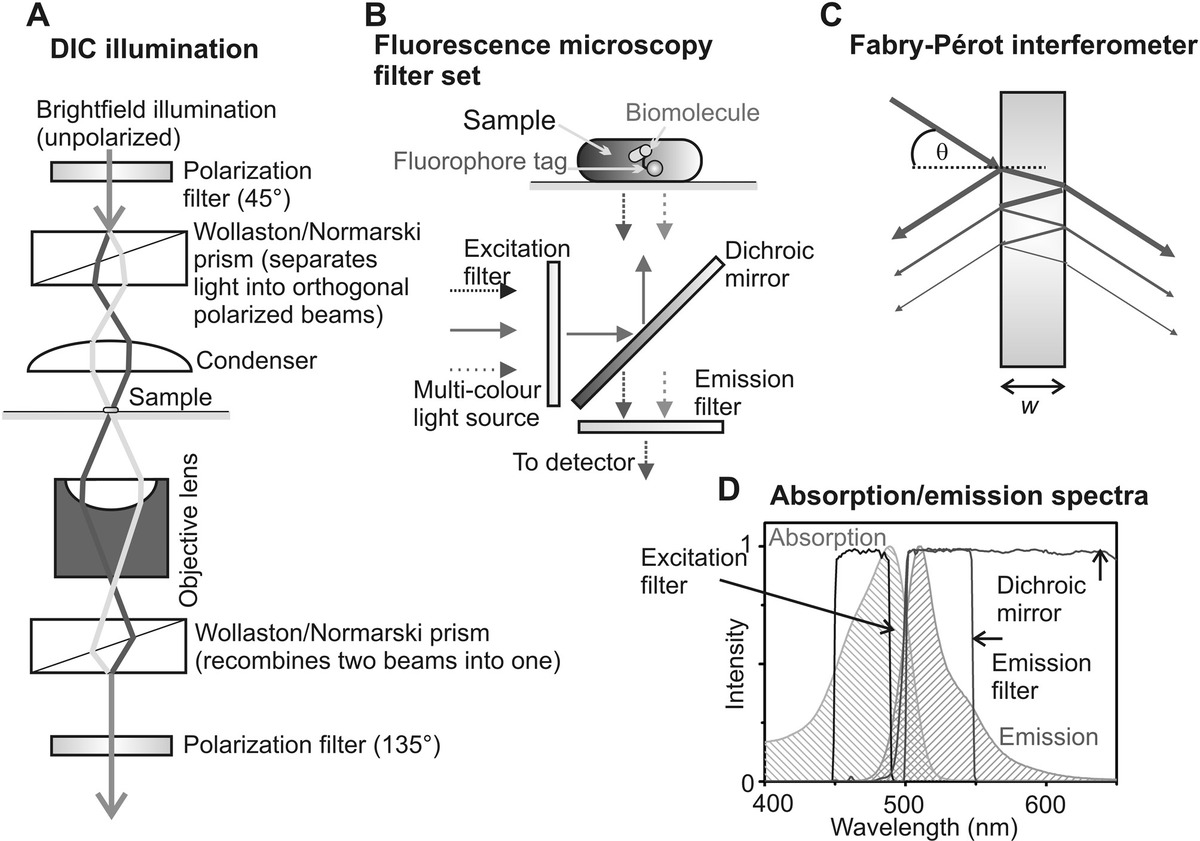

Differential interference contrast (DIC) microscopy is related to polarization microscopy in that it uses a similar interference method with polarized light illumination of the sample. A polarizer is again placed between the VIS light source and the condenser lens, set at 45° relative to the optical axis of an additional birefringent optical component, either a Wollaston prism or a Nomarski compound prism, positioned in the beam path in the front focal plane of the condenser lens. Both prisms generate two transmitted, orthogonally polarized rays of light, referred to as the “ordinary ray” and the “extraordinary ray,” with polarization E-field vectors at 0°/90° relative to each other. Because of their different speeds of propagation through the prism, these two rays emerge at different angles relative to the incident light (which is thus said to be sheared), their angular separation being called the “shear angle” (Figure 3.3a).

Figure 3.3 Generating image contrast in light microscopy. (a) Schematic of differential interference contrast illumination. (b) Typical fluorescence microscopy filter set. (c) Wavelength selection using a Fabry–Perot interferometer design. (d) Absorption and emission spectra for GFP, with overlaid excitation and emission filters and dichroic mirror in GFP filter set.

These sheared rays are used to form a separate sample ray and reference ray, which are both then transmitted through the sample. After passing through the sample and objective lens, both transmitted sheared rays are recombined by a second matched Wollaston/Nomarski prism positioned in a conjugate image plane to the first, with the recombined beam then transmitted through a second polarizer analyzer oriented to transmit light polarized at 135°. The difference in OPLs of the sample and reference beam results in an interference pattern at the image plane (normally a camera detector), and it is this wave interference that creates contrast.

Since the sample and reference beams emerge at different angles from the first Wollaston/Nomarski prism, they generate two bright-field images of orthogonal polarization that are laterally displaced from each other, typically by a few hundred nanometers, with corresponding regions of the two images having different OPLs, or phases. Thus, the resultant interference pattern depends on the variation of phase between laterally displaced points of the sample, in other words on the spatial gradient of refractive index across a biological sample. It is therefore an excellent technique for identifying the boundaries of cells and of cell organelles.
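The dependence on phase gradient rather than phase itself can be illustrated with a simplified 1D model in which two copies of the sample phase φ(x), sheared by a distance s, interfere with an adjustable bias phase b: I(x) = I₀ sin²([φ(x + s/2) − φ(x − s/2) + b]/2). This idealized model, the Gaussian "cell" phase profile, the 200 nm shear, and the π/2 bias are all assumptions for illustration, not a description of a specific instrument.

```python
import math

def dic_profile(phase, xs, shear, bias, i0=1.0):
    """Simplified 1D DIC model: I = i0 * sin^2((phi(x + s/2) - phi(x - s/2) + bias) / 2)."""
    return [i0 * math.sin((phase(x + shear / 2) - phase(x - shear / 2) + bias) / 2) ** 2
            for x in xs]

def cell_phase(x):
    """Hypothetical cell: smooth phase bump, 1.5 rad peak, ~2 um wide (x in um)."""
    return 1.5 * math.exp(-(x / 2.0) ** 2)

xs = [i * 0.25 for i in range(-24, 25)]  # positions from -6 um to +6 um
profile = dic_profile(cell_phase, xs, shear=0.2, bias=math.pi / 2)
for x, intensity in zip(xs[12:37:4], profile[12:37:4]):
    print(f"x = {x:+.1f} um  I = {intensity:.3f}")
```

Regions of uniform phase, including the cell center, sit at the background level of 0.5, while one edge is brighter and the other darker, giving the characteristic shadow-cast relief of DIC images.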

A related technique to DIC using polarized illumination is Hoffman modulation contrast (HMC) microscopy. HMC systems consist of a condenser and objective lens that have a slit aperture and two coupled polarizers instead of the first Wollaston/Nomarski prism and polarizer of DIC, and a modulator filter, whose attenuation of transmitted light varies spatially, in place of the second Wollaston/Nomarski prism. This modulator filter usually has three distinct regions of different attenuation, with typical transmittance values of T = 100% (light), 15% (gray), and 1% (dark). The condenser slit is imaged onto the gray zone of the modulator. In regions of the sample where the optical path changes rapidly with position, refraction deviates the transmitted light path. The refracted light is then attenuated either more or less on passing through the modulator filter, resulting in an image whose intensity values depend on the spatial gradient of the refractive index of the sample, similar to DIC. HMC has an advantage over DIC in that it can be used with birefringent specimens, which would otherwise produce confusing images in DIC, but a disadvantage in that DIC can use the whole aperture of the condenser, yielding higher spatial resolution information from the transmitted light.

Quantitative phase imaging (QPI) (Popescu, 2011) uses the same core physics principles as phase contrast microscopy but renders a quantitative image in which each pixel intensity is a measure of the absolute phase difference between the scattered light from a sample and a reference laser beam. It shares the advantage of being label-free and thus less prone to potential physiological artifacts due to the presence of a contrast-enhancing label such as a fluorescent dye. In effect, it creates a map of the variation of refractive index across a sample, which is a proxy for local biomolecular concentration, for example, as a metric for the spatial variation of biomolecular concentration across a tissue or in a single cell. 2D and 3D imaging modalities exist, with the latter also referred to as holotomography. The main drawback of QPI is its lack of specificity, since it is nontrivial to deconvolve the respective contributions of different cellular biomolecules to the measured refractive index. To mitigate this issue, QPI can also be combined with other forms of microscopy such as fluorescence microscopy, in which specific fluorescent dye labeling can be used with multicolor microscopy to map out the spatial distribution of several different components (see Section 3.5.3), while QPI generates a correlated image of the total biomolecular concentration in the same region of the cell or tissue sample. Similarly, QPI can be correlated with several other light microscopy techniques, including, for example, optical tweezers (see Chapter 6).
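The step from refractive index to biomolecular concentration is commonly made with an approximately linear relation, C = (n − n_medium)/α, where α is the specific refractive increment. The sketch below first converts a measured phase to a thickness-averaged refractive index, assuming the local sample thickness is known, and then to concentration. The value α = 0.19 mL/g is an assumption (a value often used for proteins), and the phase, thickness, and wavelength are hypothetical.

```python
import math

ALPHA_ML_PER_G = 0.19  # assumed specific refractive increment of protein (mL/g)

def index_from_phase(phase_rad, thickness, wavelength, n_medium=1.333):
    """Mean index along the path, inverting phase = 2*pi*(n - n_medium)*t / wavelength."""
    return n_medium + phase_rad * wavelength / (2 * math.pi * thickness)

def concentration_mg_per_ml(n_sample, n_medium=1.333, alpha=ALPHA_ML_PER_G):
    """Linear estimate of biomolecular concentration: C = (n - n_medium) / alpha."""
    return (n_sample - n_medium) / alpha * 1000.0  # g/mL converted to mg/mL

# Hypothetical pixel: 1.2 rad measured phase, 4 um cell thickness, 550 nm light
n_local = index_from_phase(1.2, 4.0, 0.55)
print(f"n ~ {n_local:.4f}, C ~ {concentration_mg_per_ml(n_local):.0f} mg/mL")
```

Because a single α is applied to every molecule, this estimate reports total biomolecular concentration and cannot distinguish one species from another, which is exactly the lack of specificity noted above.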

3.4.3 Digital Holographic Microscopy

Digital holographic microscopy is emerging as a valuable tool for obtaining 3D spatial information on the localization of swimming cells, for example, in growing cultures of bacteria, as well as for rendering time-resolved data on changes to cellular structures involved in cell motility during their normal modes of action, for example, the flagella that bacteria rotate to swim using a propeller-type action, and similarly the cilia of certain eukaryotic cells. The basic physics of hologram formation involves an interference pattern between a laser beam that passes through (or, in some variants of the technique, is reflected from) the sample and a reference beam split from the same coherent source that does not pass through the sample. The sample beam experiences phase changes due to the different range of refractive indices inside a cell compared with the culture medium outside it, similar to phase contrast microscopy. The interference pattern formed is a Fourier transform of the 3D variation of relative phase changes in the sample, and therefore the inverse Fourier transform can render 3D cellular localization information.
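Numerically, reconstruction is often done by refocusing a recorded complex field to different depths. A minimal sketch of one common approach, angular-spectrum propagation (named here as a swapped-in technique, not necessarily the method of any particular system), is shown below. It assumes the complex field at the detector has already been recovered from the hologram (e.g., by off-axis filtering), and the wavelength, pixel size, medium index, and synthetic test object are all hypothetical. The field is Fourier transformed, multiplied by a depth-dependent propagation phase, and inverse transformed; negative z back-propagates.

```python
import numpy as np

def angular_spectrum_propagate(field, wavelength, dx, z, n_medium=1.33):
    """Propagate a sampled 2D complex field a distance z (z < 0 back-propagates).

    wavelength, dx and z must share the same units (e.g., micrometres).
    """
    ny, nx = field.shape
    k = 2 * np.pi * n_medium / wavelength          # wavenumber in the medium
    kx = 2 * np.pi * np.fft.fftfreq(nx, d=dx)      # transverse spatial frequencies
    ky = 2 * np.pi * np.fft.fftfreq(ny, d=dx)
    KX, KY = np.meshgrid(kx, ky)
    kz_sq = k ** 2 - KX ** 2 - KY ** 2
    propagating = kz_sq > 0                        # evanescent components are discarded
    kz = np.sqrt(np.where(propagating, kz_sq, 0.0))
    transfer = np.where(propagating, np.exp(1j * kz * z), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

# Hypothetical object: weak phase "cell" (0.5 rad peak) in a Gaussian beam, 0.5 um pixels
coords = (np.arange(64) - 32) * 0.5
X, Y = np.meshgrid(coords, coords)
obj = np.exp(-(X ** 2 + Y ** 2) / 50.0) * np.exp(1j * 0.5 * np.exp(-(X ** 2 + Y ** 2) / 10.0))

at_detector = angular_spectrum_propagate(obj, 0.532, 0.5, 20.0)    # defocused by 20 um
refocused = angular_spectrum_propagate(at_detector, 0.532, 0.5, -20.0)
print(np.allclose(refocused, obj))
```

Repeating the back-propagation over a range of z values and finding where each cell comes into sharpest focus is one way to obtain the 3D positions of swimming cells from a single recorded frame.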

Several bespoke digital holographic systems have been developed for studying dense cultures of swimming cells in particular. These permit investigation of biological physics features such as hydrodynamic coupling effects between cells. They also permit investigation of physical biology effects such as the signaling features of swimming, including bacterial chemotaxis, the process by which bacteria use a biased random walk to swim toward a source of nutrients and away from potential toxins. Some systems do not require laser illumination and can instead function with a relatively cheap LED light source. However, they generally require an expensive camera that can sample at several thousand image frames per second, in order to capture the time-resolved information needed for fast-swimming cells and rapid structural transitions of flagella or cilia.
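A biased random walk of the run-and-tumble kind can be sketched in a few lines. This minimal 2D model is an assumption for illustration, not a quantitative model of chemotaxis. Cells swim at constant speed and tumble to a random new heading at a rate that is lowered when they head up an attractant gradient along +x. The speed (20 µm/s), base tumble rate, and bias strength are hypothetical values.

```python
import numpy as np

def run_and_tumble(n_cells=500, n_steps=2000, dt=0.01, speed=20.0,
                   base_tumble_rate=1.0, bias=0.8, seed=0):
    """2D run-and-tumble walk (positions in um, time in s) with an assumed
    attractant gradient along +x."""
    rng = np.random.default_rng(seed)
    pos = np.zeros((n_cells, 2))
    theta = rng.uniform(0.0, 2 * np.pi, n_cells)
    for _ in range(n_steps):
        # Run: move each cell along its current heading
        pos[:, 0] += speed * np.cos(theta) * dt
        pos[:, 1] += speed * np.sin(theta) * dt
        # Tumble rate is reduced when heading up the gradient (cos(theta) > 0)
        rate = base_tumble_rate * (1.0 - bias * np.cos(theta))
        tumble = rng.random(n_cells) < rate * dt
        # Tumble: pick a completely random new heading (a simplification)
        theta[tumble] = rng.uniform(0.0, 2 * np.pi, tumble.sum())
    return pos

pos = run_and_tumble()
print(f"mean displacement up the gradient after 20 s: {pos[:, 0].mean():.0f} um")
```

Suppressing tumbles while moving up the gradient is enough to produce net drift, even though each individual tumble picks a direction at random. Real bacterial tumbles are not fully randomizing, which this sketch ignores.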

3.5 Fluorescence Microscopy: The Basics

Fluorescence microscopy is an invaluable biophysical tool for probing biological processes in vitro, in live cells, and in cellular populations such as tissues. Although there may be potential issues of phototoxicity as well as impairment of biological processes due to the size of fluorescent “reporter” tags, fluorescence microscopy is the biophysical tool of choice for investigating native cellular phenomena in particular, since it provides exceptional detection contrast for relatively minimal physiological perturbation compared to other biophysical techniques. It is no surprise that the number of biophysical techniques discussed in this book is biased toward fluorescence microscopy.

3.5.1 Excitation Sources

The power of the excitation light from either a broadband or a narrow bandwidth source may first require attenuation to avoid prohibitive photobleaching, and related photodamage, of the sample. Neutral density (ND) filters are often used to achieve this. These can be either absorptive or reflective in design. They attenuate uniformly across the VIS light spectrum by a factor of $10^{ND}$, where ND is the neutral density value of the filter (i.e., the transmitted fraction is $10^{-ND}$). Broadband sources, emitting across the VIS light spectrum, commonly include the mercury arc lamp, xenon arc lamp, and metal–halide lamp. These are all used in conjunction with narrow bandwidth excitation filters (typically a 10–20 nm spectral window), which select specific regions of the light source spectrum to match the absorption peak of the particular fluorophores used in a given sample.
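The ND arithmetic is easy to check numerically. The sketch below assumes ideal filters whose ND values simply add when stacked, ignoring reflections between filters. The 50 mW source power is hypothetical.

```python
def nd_transmission(nd):
    """Fractional transmission of an ideal neutral density filter: 10**(-ND)."""
    return 10.0 ** (-nd)

def stacked_transmission(*nd_values):
    """Stacked ideal ND filters: ND values add (inter-filter reflections ignored)."""
    return nd_transmission(sum(nd_values))

source_mw = 50.0  # hypothetical source power at the filter
for nd in (0.3, 1.0, 2.0):
    print(f"ND {nd}: {source_mw * nd_transmission(nd):.2f} mW")
print(f"ND 1 + ND 2 stacked: {source_mw * stacked_transmission(1.0, 2.0):.3f} mW")
```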

Narrow bandwidth sources include lasers, with an emission bandwidth of around a nanometer. Bright LEDs can be used as intermediate bandwidth fluorescence excitation sources (~20–30 nm spectral width). Broadband lasers, the so-called white-light supercontinuum lasers, are becoming increasingly common as fluorescence excitation sources in research laboratories. This is due to reductions in cost coupled with improvements in power output across the VIS light spectrum. These sources require either spectral excitation filters to select different colors or a more dynamic method of color selection such as an acousto-optic tunable filter (AOTF).

The physics of AOTFs is similar to that of the acousto-optic deflector (AOD), used, for example, in many optical tweezers (OT) devices to position laser traps, discussed in Chapter 5. Suffice it to say here that an AOTF is an optically transparent crystal in which a standing wave can be generated by applying radio frequency oscillations across the crystal surface. These periodic features generate a predictable steady-state spatial variation of refractive index in the crystal, which in effect acts as a diffraction grating. The diffraction angle is a function of the light's wavelength; therefore, different colors are spatially split.
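The sketch below shows why wavelengths separate. It treats the acoustic wave as a thin diffraction grating of period Λ = v/f, where v is the acoustic speed and f the RF frequency, and applies the first-order grating equation sin θ = λ/Λ. This is a simplified picture matching the description above; real AOTFs operate in the Bragg regime. The acoustic speed of 650 m/s and the 100 MHz drive frequency are assumed illustrative values.

```python
import math

def first_order_angle_deg(wavelength_m, rf_hz, acoustic_speed_m_s=650.0):
    """First-order diffraction angle in a thin-grating picture:
    sin(theta) = wavelength / period, with period = acoustic_speed / rf_hz.
    The default acoustic speed is an assumed illustrative value."""
    period_m = acoustic_speed_m_s / rf_hz
    return math.degrees(math.asin(wavelength_m / period_m))

for wl_nm in (488, 561, 640):
    angle = first_order_angle_deg(wl_nm * 1e-9, 100e6)
    print(f"{wl_nm} nm -> {angle:.2f} deg")
```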

The maximum switching frequency for an AOTF is several tens of MHz. Switching here means moving between an “off” state, in which the incident light is not deviated, and an “on” state, in which it is deviated. An AOTF can therefore select different colors dynamically more than four orders of magnitude faster than the sampling time of a typical fluorescence imaging experiment. The principal issues with an AOTF, however, are a drop in output power of >30% on passing through the device and its often prohibitive cost.
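The "orders of magnitude" comparison is just log10 of the ratio of the camera frame time to the AOTF switching period. The 10 MHz switching rate and the 1 ms and 33 ms frame times below are illustrative assumptions.

```python
import math

def orders_faster(switch_rate_hz, frame_time_s):
    """Orders of magnitude between a frame time and the switching period."""
    switch_period_s = 1.0 / switch_rate_hz
    return math.log10(frame_time_s / switch_period_s)

# Hypothetical 10 MHz switching compared with 1 ms and 33 ms camera frames
for frame_s in (1e-3, 33e-3):
    print(f"{frame_s * 1e3:.0f} ms frame: {orders_faster(10e6, frame_s):.1f} orders of magnitude")
```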

3.5.2 Fluorescence Emission

The difference in wavelength between the peaks of absorbed and emitted light is called the Stokes shift. The full spectral emission profile of a particular fluorophore, φEM(λ), is the relation between the intensity of fluorescence emission and emission wavelength, normalized such that the integrated area under the curve is 1. Similarly, the spectral excitation profile of a particular fluorophore, φEX(λ), represents the variation of excitation absorption as a function of incident wavelength. This profile looks similar to a mirror image of the φEM(λ) profile, offset by the Stokes shift.
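The normalization and Stokes shift definitions can be illustrated with model spectra. The sketch below uses Gaussian stand-ins for φEX(λ) and φEM(λ) with assumed peaks at 488 and 509 nm. These are roughly GFP-like but are not measured data. Real spectra are asymmetric, so this only shows the bookkeeping.

```python
import numpy as np

wl = np.linspace(400.0, 700.0, 3001)   # wavelength grid (nm), 0.1 nm spacing
dwl = wl[1] - wl[0]

def normalized_gaussian_spectrum(peak_nm, width_nm):
    """Model spectrum normalized so that its area over the grid is 1."""
    s = np.exp(-0.5 * ((wl - peak_nm) / width_nm) ** 2)
    return s / (s.sum() * dwl)

# Hypothetical excitation and emission profiles (assumed peaks and widths)
phi_ex = normalized_gaussian_spectrum(488.0, 15.0)
phi_em = normalized_gaussian_spectrum(509.0, 15.0)

stokes_shift = wl[np.argmax(phi_em)] - wl[np.argmax(phi_ex)]
print(f"Stokes shift ~ {stokes_shift:.1f} nm")
print(f"area under phi_em = {phi_em.sum() * dwl:.3f}")
```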

A typical fluorescence microscope exploits the Stokes shift by using a specially coated filter called a dichroic mirror. This is usually positioned near the back aperture of the objective lens, in a filter set consisting of a dichroic mirror, an emission filter, and, if appropriate, an excitation filter (Figure 3.3b). The dichroic mirror reflects incident excitation light but transmits longer wavelength light, such as fluorescence emission from the sample.

All samples also generate elastically scattered light, whose wavelength is identical to that of the incident light. The largest source of elastic backscatter is usually the interface between the glass coverslip/slide on which the sample is positioned and the water-based solution bathing the sample. At normal incidence this interface reflects ~0.4% of the incident excitation light (~4% for a glass–air interface). Typical fluorescent samples have a ratio of emitted fluorescence intensity to total backscattered excitation light of $10^{-4}$ to $10^{-6}$. Therefore, the dichroic mirror ideally transmits less than a millionth of the light at the excitation wavelength.
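The size of the coverslip reflection can be estimated from the normal-incidence Fresnel reflectance, R = ((n1 − n2)/(n1 + n2))². The sketch below assumes refractive indices of 1.515 for coverslip glass, 1.33 for water, and 1.0 for air. It also shows the simple ratio behind the one-in-a-million dichroic requirement.

```python
def fresnel_normal_reflectance(n1, n2):
    """Fraction of light reflected at normal incidence from an n1/n2 interface."""
    return ((n1 - n2) / (n1 + n2)) ** 2

r_glass_water = fresnel_normal_reflectance(1.515, 1.33)
r_glass_air = fresnel_normal_reflectance(1.515, 1.0)
print(f"glass/water: {100 * r_glass_water:.2f} %")
print(f"glass/air:   {100 * r_glass_air:.2f} %")

# If fluorescence is 1e-6 of the backscattered excitation, a dichroic that
# transmits 1e-6 of the excitation leaks as much excitation as there is signal
fluorescence_to_backscatter = 1e-6
dichroic_leak = 1e-6
print("leaked excitation / fluorescence:", dichroic_leak / fluorescence_to_backscatter)
```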

Most modern dichroic mirrors operate as interference filters by using multiple etalon layers of thin films of dielectric or metal of different refractive indices to generate spectral selectivity in reflectance and transmission. A single etalon consists of a thin, optically transparent, refractive medium, whose thickness w is less than the wavelength of light, which therefore results in interference between the transmitted and reflected beams from each optical surface (a Fabry–Pérot interferometer operates using similar principles). With reference to Figure 3.3c, the phase difference Δφ between a pair of successive transmitted beams is

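$$\Delta\phi = \frac{4\pi n w\cos\theta}{\lambda} \tag{3.23}$$

where

- λ is the free-space wavelength

- n is the refractive index of the etalon material

- θ is the angle between the beam and the surface normal inside the etalon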

The finesse coefficient F is often used to characterize the spectral selectivity of an etalon, defined as

$$F = \frac{4R}{(1-R)^{2}} \tag{3.24}$$

where R is the reflectance, which is also given by 1 – T where T is the transmittance, assuming no absorption losses. By rearrangement,

$$T = \frac{1}{1 + F\sin^{2}(\Delta\phi/2)} \tag{3.25}$$

A maximum T of 1 occurs when the OPL difference between successive transmitted beams, 2nw cos θ, is an integer number of wavelengths. Similarly, the maximum R occurs when this OPL difference equals a half-integer multiple of the wavelength. The peaks in T are separated by a width Δλ known as the free spectral range. Using Equations 3.23 through 3.25, Δλ is approximated as

$$\Delta\lambda \approx \frac{\lambda_{\mathrm{peak}}^{2}}{2nw\cos\theta} \tag{3.26}$$

where λpeak is the wavelength of the central T peak. The sharpness of each peak in T is measured by the full width at half maximum, δλ, which can be approximated as

$$\delta\lambda \approx \frac{2\,\Delta\lambda}{\pi\sqrt{F}} \tag{3.27}$$

Typical dichroic mirrors may have three or four different thin film layers, generated by either evaporation or sputtering methods in a vacuum (see Chapter 7). They are optimized to work at θ = 45°, the usual orientation in a fluorescence microscope.
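Equations 3.23 through 3.27 can be checked numerically. The sketch below evaluates the Airy transmission of a single lossless etalon. It then compares the approximate free spectral range and peak width with values measured from the computed curve. Those approximations assume Δλ is small compared with λpeak. The sketch therefore uses a hypothetical layer 50 µm thick, much thicker than a real dichroic coating layer, with n = 1.45, R = 0.9, and normal incidence.

```python
import numpy as np

def etalon_transmission(wl, n, w, theta, R):
    """Airy transmission of a lossless etalon; wl and w in the same length
    units, theta the internal angle in radians."""
    dphi = 4 * np.pi * n * w * np.cos(theta) / wl      # phase difference (3.23)
    F = 4 * R / (1 - R) ** 2                            # finesse coefficient (3.24)
    return 1.0 / (1.0 + F * np.sin(dphi / 2) ** 2)      # transmission (3.25)

# Hypothetical thick layer so that the small-FSR approximations apply
n, w, theta, R = 1.45, 50_000.0, 0.0, 0.9   # all lengths in nm
F = 4 * R / (1 - R) ** 2
opl = 2 * n * w * np.cos(theta)
lam_peak = opl / 250                        # 250th-order transmission peak (580 nm)

fsr_approx = lam_peak**2 / opl                            # Eq. 3.26
fwhm_approx = 2 * fsr_approx / (np.pi * np.sqrt(F))       # Eq. 3.27

# Numerical check: sample T finely around the peak and measure its FWHM
wl = np.linspace(lam_peak - 0.5, lam_peak + 0.5, 1_000_001)
T = etalon_transmission(wl, n, w, theta, R)
above_half = wl[T >= 0.5]
fwhm_numeric = above_half.max() - above_half.min()
fsr_exact = opl / 249 - lam_peak            # spacing to the next (order 249) peak

print(f"FSR:  approx {fsr_approx:.3f} nm, exact {fsr_exact:.3f} nm")
print(f"FWHM: approx {fwhm_approx:.4f} nm, numeric {fwhm_numeric:.4f} nm")
```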

The transmission function of a typical VIS light dichroic mirror, TD(λ), is thus typically $<10^{-6}$ for λ < (λcut-off − Δλcut-off/2). It is more likely 0.90–0.99 for λ > (λcut-off + Δλcut-off/2), up to the maximum VIS wavelength of ~750 nm. Here λcut-off is the characteristic cutoff wavelength between the shorter wavelength, high-attenuation band and the longer wavelength, high-transmission band, usually optimized against the emission spectrum of the particular fluorescent dye in question. The value Δλcut-off is a measure of the sharpness of this transition from very high to very low attenuation of the light, typically ~10 nm.

In practice, an additional fluorescence emission filter, usually a band-pass filter, is applied to the transmitted light. Its transmission function TEM(λ) is $<10^{-8}$ for λ < (λmidpoint − Δλmidpoint/2) and for λ > (λmidpoint + Δλmidpoint/2). Between these boundaries it is more likely 0.90–0.99. Here λmidpoint is the midpoint wavelength of the band-pass window and Δλmidpoint is the bandwidth of the window, ~10–50 nm depending on the fluorophore and imaging application (Figure 3.3d).
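A simple way to see how the dichroic and emission filters combine is to multiply idealized transmission functions. The sketch below makes these assumptions:

- a dichroic with a log-linear edge, a cutoff of 500 nm, and Δλcut-off = 10 nm;
- a hard-edged 50 nm band-pass filter centered at 525 nm;
- a pass-band transmission of 0.95 for both.

These shapes and numbers are illustrative, not measured filter curves.

```python
import numpy as np

def dichroic_T(wl, cutoff=500.0, width=10.0, t_block=1e-6, t_pass=0.95):
    """Idealized long-pass dichroic: log-linear ramp from t_block to t_pass
    across [cutoff - width/2, cutoff + width/2] (assumed edge shape)."""
    log_t = np.interp(wl, [cutoff - width / 2, cutoff + width / 2],
                      [np.log10(t_block), np.log10(t_pass)])
    return 10.0 ** log_t

def bandpass_T(wl, mid=525.0, width=50.0, t_block=1e-8, t_pass=0.95):
    """Idealized band-pass emission filter with hard edges."""
    inside = np.abs(np.asarray(wl) - mid) <= width / 2
    return np.where(inside, t_pass, t_block)

for wl in (488.0, 525.0, 600.0):
    total = float(dichroic_T(wl) * bandpass_T(wl))
    print(f"{wl:.0f} nm: combined transmission {total:.2e}")
```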

The fluorescence quantum yield (Φ) gives a measure of the efficiency of the fluorescence process as the ratio of emitted photons to photons absorbed, given by

$$\Phi = \frac{k_s}{k_s + \sum_i k_i} = 1 - \sum_i \Phi_i \tag{3.28}$$

where

- ks is the spontaneous rate of radiative emission

- Φi and ki are the individual efficiencies and rates, respectively, for the various decay processes of the excited state (internal conversion, intersystem crossing, phosphorescence)
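Equation 3.28 can be expressed in code with hypothetical decay rates. The quantum yield is the radiative rate's share of the total decay rate, and the individual efficiencies Φi of the competing channels account for the remainder. The rate values below are assumptions for illustration.

```python
def quantum_yield(k_s, other_rates):
    """Fluorescence quantum yield: k_s / (k_s + sum of competing decay rates)."""
    return k_s / (k_s + sum(other_rates))

# Hypothetical rates (per second)
k_s = 2.5e8
others = {"internal conversion": 2e7, "intersystem crossing": 5e6}

phi = quantum_yield(k_s, others.values())
total_rate = k_s + sum(others.values())
phi_i = {name: k / total_rate for name, k in others.items()}

print(f"Phi = {phi:.3f}")
print(f"1 - sum(Phi_i) = {1 - sum(phi_i.values()):.3f}")
```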

The fluorescence emission intensity IEM from a given fluorophore emitting isotropically (i.e., with equal probability in all directions), which is detected by a camera, can be calculated as

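$$I_{\mathrm{EM}} = \Phi\, I_{\mathrm{abs}}\, \frac{\Omega}{4\pi} \int \phi_{\mathrm{EM}}(\lambda)\, T_{\mathrm{D}}(\lambda)\, T_{\mathrm{EM}}(\lambda)\, T_{\mathrm{OTHER}}(\lambda)\, E_{\mathrm{camera}}(\lambda)\, d\lambda \tag{3.29}$$

where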

- Iabs is the total absorbed light power integrated over all wavelengths

- Ω is the solid angle over which the objective lens collects photons

- TOTHER is a combination of all of the other transmission spectra of the other optical components on the emission path of the microscope

- Ecamera is the efficiency of photon detection of the camera
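The sketch below is a numerical version of Equation 3.29. It uses the solid angle of a cone, Ω = 2π(1 − cos α), where sin α = NA/n for an objective of numerical aperture NA in an immersion medium of index n. The remaining inputs are idealized: a Gaussian emission spectrum, idealized filter curves, and constant TOTHER and Ecamera values. The NA 1.4 oil objective, spectra, quantum yield, and efficiencies are all hypothetical.

```python
import math
import numpy as np

def collection_fraction(na, n_immersion):
    """Omega / (4 pi) for a cone of half-angle alpha, with sin(alpha) = NA / n."""
    alpha = math.asin(na / n_immersion)
    return (1.0 - math.cos(alpha)) / 2.0

wl = np.linspace(450.0, 700.0, 2501)                      # nm
dwl = wl[1] - wl[0]
phi_em = np.exp(-0.5 * ((wl - 525.0) / 20.0) ** 2)
phi_em /= phi_em.sum() * dwl                              # unit-area emission profile
t_d = np.where(wl > 505.0, 0.95, 1e-6)                    # idealized dichroic
t_em = np.where(np.abs(wl - 525.0) <= 25.0, 0.95, 1e-8)   # idealized band-pass
t_other = 0.8                                             # assumed other optics
e_camera = 0.9                                            # assumed camera efficiency

frac = collection_fraction(1.4, 1.515)                    # oil-immersion objective
spectral_factor = np.sum(phi_em * t_d * t_em * t_other * e_camera) * dwl
phi, i_abs = 0.8, 1.0                                     # assumed Phi; I_abs normalized
i_em = phi * i_abs * frac * spectral_factor

print(f"Omega/4pi = {frac:.3f}")
print(f"detected fraction of absorbed power ~ {i_em:.3f}")
```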

The total SNR for fluorescence emission detection is then

$$\mathrm{SNR} = \frac{G\sum_{i=1}^{n} N_{\mathrm{EM},i}}{\sqrt{G^{2}\left(\sum_{i=1}^{n} N_{\mathrm{EM},i} + N_{\mathrm{EX}}\right) + n N_{\mathrm{CAM}}^{2}}} \tag{3.30}$$

where

- NEM is the number of fluorescence emission photons detected per pixel, i.e., after all capture and transmission losses through the microscope, summed over the extent of a fluorophore image consisting of n pixels in total

- G is the camera gain, i.e., for every photon detected, G electron counts are generated per pixel

- NCAM is the readout noise per pixel

- NEX is the number of excitation photons transmitted onto an equivalent region of the camera over which the fluorophore is detected
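The SNR bookkeeping can be sketched under a standard shot-noise-plus-readout-noise model. Emission and leaked excitation photons contribute Poisson noise scaled by the gain G, and each of the n pixels adds readout noise NCAM. This model and the example numbers (a 9-pixel spot with 100 photons per pixel) are assumptions for illustration. Electron-multiplying excess noise is ignored.

```python
import math

def fluorescence_snr(n_em_per_pixel, n_ex_total, n_cam, gain=1.0):
    """Assumed model: signal = G * sum(N_EM); noise combines, in quadrature,
    gain-scaled shot noise of emission plus leaked excitation photons and
    per-pixel readout noise N_CAM over n pixels."""
    n = len(n_em_per_pixel)
    total_em = sum(n_em_per_pixel)
    signal = gain * total_em
    noise = math.sqrt(gain**2 * (total_em + n_ex_total) + n * n_cam**2)
    return signal / noise

# Hypothetical 3 x 3 pixel spot, 100 photons per pixel, 50 leaked excitation
# photons over the same region, 3 counts readout noise per pixel
snr = fluorescence_snr([100] * 9, n_ex_total=50, n_cam=3.0)
print(f"SNR ~ {snr:.1f}")
```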

3.5.3 Multicolor Fluorescence Microscopy

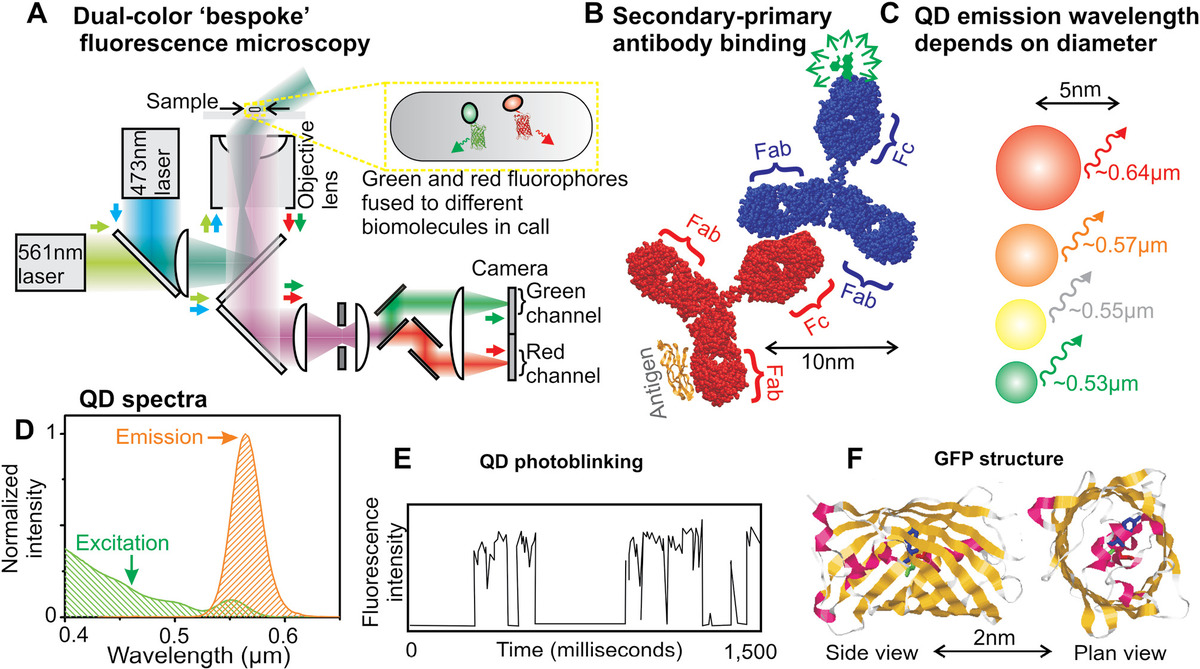

A useful extension of single-fluorophore fluorescence microscopy is to use two or more different fluorophore types that are excited by, and emit, different characteristic ranges of wavelength. Suppose each type of fluorophore can be tagged onto a different type of biomolecule in an organism. It is then possible to monitor interactions between these different molecular components. It is also possible to see where, and at which characteristic stages of the life cycle, each is expressed in the organism, and how different effects from the external environment influence the spatial distributions of the different molecular components. Achieving this requires splitting the fluorescence emission signal from each type of fluorophore onto a separate detector channel.

The simplest way to achieve this is to mechanically switch between different fluorescence filter sets suited to the respective fluorophore types and acquire separate images of the same region of the sample. One disadvantage is that mechanically switching filter sets can judder the sample. In addition, the different filter set components may be very slightly out of alignment with each other. Together, these effects make it more challenging to coalign the different color channel images with high accuracy. Separate bright-field images of the sample must then be acquired for each filter set to facilitate correct alignment (see Chapter 8).

A more challenging issue is the time delay, at least around a second, when mechanically switching filter sets. This sets an upper limit on the speed of biological dynamics that can be explored using multiple fluorophore types. One way round this problem is to use a specialized multiple band-pass dichroic mirror in the filter set, which permits excitation and transmission of multiple fluorophore types. One or more additional standard dichroic mirrors and single band-pass emission filters downstream of the filter set then split the mixed color fluorescence signal. Each color channel is steered to a different camera or onto a different region of the same camera pixel array (Figure 3.4a). Dual- and sometimes triple-band dichroic mirrors are often used.

The main issue with having more bands arises because the emission spectrum of a typical fluorophore is often broad. Each additional color band therefore results in losing some photons to avoid cross talk between bands, that is, bleed-through of the fluorescence signal from one fluorophore type into the detection channel of another. The need for sufficient brightness in all color channels sets a practical limit on the number of channels. However, quantum dots (QDs) have much sharper emission spectra than other types of fluorophores, and investigations can potentially use up to seven detection bands across the VIS and near IR light spectrum.
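The trade-off between photon loss and cross talk can be illustrated with two model emission spectra and an ideal detection band. The sketch below assumes Gaussian spectra for a hypothetical green dye (peak 510 nm) and red dye (peak 580 nm) and compares a wide and a narrow green detection band. Real emission spectra have long red tails, so the numbers only illustrate the trend.

```python
import numpy as np

wl = np.linspace(450.0, 750.0, 30001)   # nm, 0.01 nm spacing
dwl = wl[1] - wl[0]

def emission(peak_nm, sigma_nm):
    """Unit-area Gaussian stand-in for a fluorophore emission spectrum."""
    s = np.exp(-0.5 * ((wl - peak_nm) / sigma_nm) ** 2)
    return s / (s.sum() * dwl)

def captured(spectrum, lo_nm, hi_nm):
    """Fraction of a unit-area spectrum falling inside an ideal band [lo, hi]."""
    inside = (wl >= lo_nm) & (wl <= hi_nm)
    return float(spectrum[inside].sum() * dwl)

green = emission(510.0, 20.0)   # hypothetical green dye
red = emission(580.0, 25.0)     # hypothetical red dye

for lo, hi in ((500.0, 550.0), (505.0, 535.0)):
    g = captured(green, lo, hi)
    r = captured(red, lo, hi)
    print(f"green band {lo:.0f}-{hi:.0f} nm: green {g:.3f}, red bleed-through {r:.3f}")
```

In this model, narrowing the green band cuts the red dye's bleed-through by roughly a factor of three. The cost is discarding about a quarter of the green photons.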

Figure 3.4 Fluorophores in biophysics. (a) Bespoke (i.e., homebuilt) dual-color fluorescence microscope used in imaging two fluorophores of different color in a live cell simultaneously. (b) Fluorescently labeled secondary antibody binding to a specific primary antibody. (c) Dependence of QD fluorescence emission wavelength on diameter. (d) Normalized excitation (green) and emission (orange) spectra for a typical QD (peak emission 0.57 μm). (e) Example fluorescence intensity time trace for a QD exhibiting stochastic photoblinking. (f) Structure of GFP showing beta strands (yellow), alpha helices (magenta), and random coil regions (gray).

3.5.4 Photobleaching of Fluorophores

Single-photon excitation of a bulk population of photoactive fluorophores of concentration C(t), at time t after the start of the photon absorption process, follows first-order bleaching kinetics, which is straightforward to demonstrate and results in